Compound Learnings From Your Experimentation Program With An End-Of-Year Roadmapping Exercise

Before you build next year’s testing roadmap, here are 3 steps to make sure your efforts from last year are taken into account. Follow them and your learnings will compound.

A dream without a plan is just a wish. You’ve probably heard this from a coach or mentor at some point, and that’s because it is applicable to most things in life. I’m here to remind you that it’s also true for improving your digital experience and optimization process.

You have a goal to deliver the best experience in your industry, whether it’s ecommerce, SaaS, digital media, or something else. You want to convert more of your visitors, grow your user base, or increase subscribers.

If you’re thinking, “I already have a plan and plenty of experiments in progress,” first, congratulations! We love to hear that. Second, this is actually the perfect article for you.

I’m sharing a step-by-step exercise for the end of the year that takes learnings from past experiments or tests and helps you leverage them to compound results.

This exercise will inform the plan that turns your dream of a better digital experience for your customers into a reality.

Why should you be thinking about this now?

As Q3 quickly comes to a close, the holiday season and planning for next year are right around the corner.

If you work at an ecommerce, SaaS, or digital media company, you’re probably beginning some sort of holiday campaign that was planned months ago.

The end of the year is mapped out, so make time on your calendar next quarter to start considering your roadmap for next year. What are your goals for your digital experience? And how are you learning from the past year to inform the next steps to reach them?

For many companies, the best way to start answering these questions is with a three-step optimization process that reviews your digital experience and tests from the past year to help inform your plan for the future.

We recently went through this optimization process with a client, so let’s take a look at what this looks like in action.

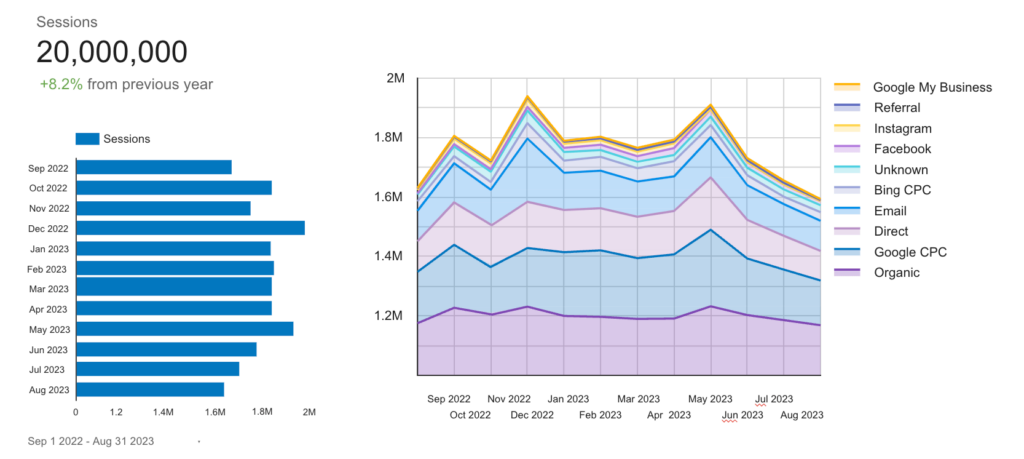

Step 1: Review Key Analytics Data

The first step in the optimization process is to review progress from the year.

Specifically, run a data report in Google Analytics for a 12-month period and pay special attention to the following:

- How session count changed over the year

- Where that traffic came from

- How conversions changed by:

  - Device type

  - Top user groups

  - Channel groupings

  - KPIs

This helps you understand where there was growth and where to focus your efforts next year.
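If you export the report rows instead of reading them in the Google Analytics interface, the year-over-year comparison by segment can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the export layout (one row per year and segment), the function names, and every number below are hypothetical and made up purely for illustration. The segment here is device type, but a channel grouping or user group column works the same way.

```python
from collections import defaultdict

# Hypothetical export rows: (year, segment, sessions, conversions).
# These numbers are invented for illustration; a real GA export will
# have its own column names and layout.
ROWS = [
    (2022, "mobile", 40_000, 800),
    (2022, "desktop", 30_000, 900),
    (2023, "mobile", 50_000, 1_250),
    (2023, "desktop", 28_000, 868),
]


def conversion_rates(rows):
    """Sum sessions and conversions per (year, segment) and return conversion rates."""
    totals = defaultdict(lambda: [0, 0])  # (year, segment) -> [sessions, conversions]
    for year, segment, sessions, conversions in rows:
        totals[(year, segment)][0] += sessions
        totals[(year, segment)][1] += conversions
    # Skip segments with zero sessions to avoid dividing by zero.
    return {key: conv / sess for key, (sess, conv) in totals.items() if sess}


def yoy_change(rates, prev_year, year):
    """Relative change in conversion rate per segment between two years."""
    changes = {}
    for (y, segment), rate in rates.items():
        prev = rates.get((prev_year, segment))
        # Only compare segments that exist in both years with a nonzero baseline.
        if y == year and prev:
            changes[segment] = (rate - prev) / prev
    return changes


if __name__ == "__main__":
    rates = conversion_rates(ROWS)
    for segment, change in sorted(yoy_change(rates, 2022, 2023).items()):
        print(f"{segment}: {change:+.1%} YoY conversion rate")
```

Comparing relative change per segment, rather than only the site-wide total, is what surfaces findings like "mobile is growing faster than desktop" that feed the opportunities in the examples that follow.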

Here is what some of the key learnings from the analysis might look like:

Example Key Learning #1

Learning: Traffic trends show the highest session count in X month

Details: A breakdown by source and medium shows a YoY decrease in session count from Google organic and CPC, with a larger increase in traffic associated with social campaigns, email, etc.

Opportunity: Prepare an email campaign for mid-year

Example Key Learning #2

Learning: X% increase in goal conversion rate compared to the previous year.

Details: There was an increase in overall goal completions compared to the previous year, slowing down in X months. We see similar growth in mobile and desktop conversion rates (+X% and +X%, respectively).

Opportunity: Examine mobile experience to continue growth in this area

Example Key Learning #3

Learning: Form submissions increased

Details: Looking at the form submission trends, we see an increase across all goals, with the largest impact on X form submissions.

Opportunity: Revisit form analysis on key landing pages

These learnings and the associated details give the context that will inform any seasonal opportunities, growth areas, or sticking points.

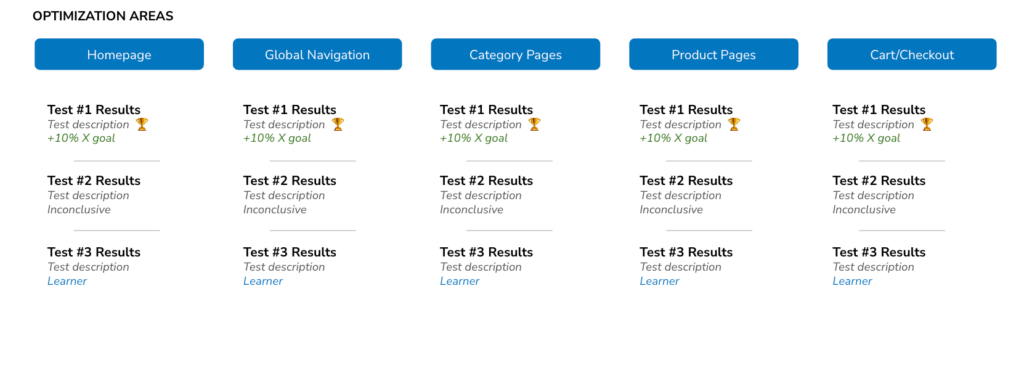

Step 2: Review Tests From The Past Year

Next, if you have an active conversion optimization or experimentation program, review:

- All of the tests that you have run in the past year

- The results of those tests

- The learnings from the results

Each learning can become a follow-up concept, and the metric results gathered from each test can help you prioritize those concepts on your roadmap.

For example, if a test on a certain element of the site did not produce a huge impact on conversions, the learning may be that you need to make bigger changes in that area of the site in order to have an impact. This is something you want to pull out and keep in mind if you decide to test other optimizations to that element next year.

A good process for this step is to outline all of the tests that you have run, then one-by-one refresh yourself and your team on the hypothesis, background, and learnings from the experiments.

If you’re presenting this to the C-suite or other organizational stakeholders, we recommend starting with an overview slide that lists each test’s site area and key metrics, followed by one slide per test with the details. Review the main highlights, and only go deeper when a detail is particularly relevant (or your stakeholders are interested).
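If your team keeps its test log in a spreadsheet or script rather than in slides, the review structure described above (an overview of every test by site area and key metric, then one entry per test covering hypothesis, background, and learnings) can be sketched as a small Python record. This is a hypothetical illustration: the `TestRecord` fields and helper names are assumptions, not a standard schema or a specific tool’s format.

```python
from dataclasses import dataclass


@dataclass
class TestRecord:
    """One past experiment. Field names are illustrative, not a standard schema."""
    name: str
    site_area: str
    key_metric: str
    result: str          # e.g. "no detectable change"
    hypothesis: str
    background: str
    learning: str
    follow_up: str = ""  # follow-up concept for next year, if any


def overview(tests):
    """Overview slide: one line per test, grouped by site area."""
    return [
        f"{t.site_area} | {t.name} | {t.key_metric}: {t.result}"
        for t in sorted(tests, key=lambda t: t.site_area)
    ]


def detail(test):
    """Per-test slide: hypothesis, background, and learning, plus any follow-up."""
    lines = [
        f"Test: {test.name}",
        f"Hypothesis: {test.hypothesis}",
        f"Background: {test.background}",
        f"Learning: {test.learning}",
    ]
    if test.follow_up:
        lines.append(f"Follow-up concept: {test.follow_up}")
    return lines


def follow_up_concepts(tests):
    """Collect the learnings that became follow-up concepts for the roadmap."""
    return [(t.site_area, t.follow_up) for t in tests if t.follow_up]


if __name__ == "__main__":
    # Hypothetical test mirroring the "small change, small impact" example above.
    badge = TestRecord(
        name="Product page badge",
        site_area="Product page",
        key_metric="Add-to-cart rate",
        result="no detectable change",
        hypothesis="A trust badge near the CTA will increase add-to-cart rate",
        background="User tests suggested shoppers hesitated at the CTA",
        learning="The change may have been too small to move conversions",
        follow_up="Test a bolder redesign of the CTA area",
    )
    print("\n".join(overview([badge])))
    print("\n".join(detail(badge)))
```

Keeping `follow_up` on the same record as the learning means the follow-up concepts for next year’s roadmap fall straight out of the review, rather than being reconstructed from slides later.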

Step 3: Identify Pending Questions From Experiments and Conduct New Research

The next step is to identify any pending questions from your past experiments and supplement them with research as necessary.

For example, you may want to run new session recordings or user tests on the winning or losing variants of experiments to uncover additional optimization opportunities or to understand why your hypothesis may have failed.

Here is how this might look in practice: if the mobile variant of a paid landing page test didn’t perform as well as the desktop variant, you may establish new research questions and run additional user testing.

In this scenario, let’s say you revisit session recordings of users who saw the mobile variant of your experiment. You see users missing the main CTA and then abandoning the page. This behavior suggests that users don’t perceive the CTA as the primary action they should take on the page, so there may be an opportunity to rework how you present it in the next iteration of this test so that it clearly guides users toward that goal.

By reviewing what was learned in the first test (the mobile variant wasn’t performing as well as desktop) and supplementing it with new research, you turn that initial result into a compound learning (the CTA wasn’t clear enough).

This illustrates how the exercise can surface new opportunities for a future roadmap. You have the background from the original research, and you’re armed with insights from the variant that didn’t perform as you hypothesized. You’ll be able to apply both to next year’s roadmap.

In summary, after reviewing tests, do an experience review of key pages, based on past learnings and current user behavior. Use that information to compound learnings and identify opportunities to build a better user experience.

(Bonus) Step 4: Prioritize Opportunities & Allow Your Learnings to Compound

Now that you’ve done the 3-step optimization process, it’s time to ideate on opportunities. This is also where you begin to prioritize a roadmap for the following year.

Here is a bonus, in-depth article to guide you through the process: How to Build an Efficient A/B Testing Roadmap.

The goal of this step is to get your roadmap together, taking into account the exercises we just went through. So, make sure your roadmap includes:

- Opportunities identified by analytics data from the previous year

- Follow-up concepts based on tests you ran last year

- Uncovered sticking points from your experience review of key pages

- Any testing opportunities you have put off to revisit in the following year

The deliverable can look something like the above. It should outline the areas of the site you want to test and the concepts you would run in each, in priority order.

Consistency Compounds, So Keep Your Learnings Top Of Mind All Year Long

That’s a peek into some of our strategies for making sure learnings compound YoY. The longer you run an experimentation program, the better your experience becomes. This is because you’re consistently compounding your learnings about users and improving your optimization process.

To summarize, before you create next year’s roadmap, make sure to:

- Review key analytics data

- Review tests from the past year

- Identify pending questions and supplement them with research

The exercise of reviewing your site data, experiments, and learnings from the past year will help you build on any optimizations to create an even better digital experience.

Keep in mind that visibility into learnings can help guide future concepts throughout the year, not just at the end. A great way to do this is to keep a dashboard of your key data, learnings, and insights handy. But that’s a topic for a different day.

If you’d like your learnings to compound and your wins or results to do the same, we can help you launch or expand your experimentation program. The Good works with ecommerce and product marketing teams to optimize the digital experience with research, validation, and implementation. Get in touch here.


About the Author

Maggie Paveza

Maggie Paveza is a Strategist at The Good. She has years of experience in UX research and Human-Computer Interaction, and acts as an expert on the team in the area of user research.