How to Build an Efficient A/B Testing Roadmap

A well-structured testing roadmap is essential to operating an efficient optimization program. Here's how to build one.

If you’re thinking about starting an optimization program for your digital product, you’re likely inundated with questions about how to get started – specifically, how to build an efficient A/B testing roadmap.

How should you decide what to optimize on your site, and in what order? How do you identify high-value testing opportunities? How can you prevent multiple tests from interfering with each other?

The optimization process can be overwhelming for a business at any stage, which is why creating an A/B testing roadmap is such a critical step. Building the roadmap is about more than outlining a testing schedule: it requires you to define your goals, align with stakeholders, and assess priorities and risks.

Whether you’re a researcher, an analyst, a marketer, or an optimization specialist, this insight is designed to connect those from any discipline with the clear steps they need to create an optimization roadmap. We’ll cover how to:

- Identify clear objectives for your testing program

- Establish website challenges

- Isolate testing opportunities

- Formulate testing hypotheses

- Prioritize your testing opportunities

Start with clear objectives

To get a testing program off to a good start, teams and individuals should align on what they hope to achieve with it. The objectives you select may depend on a number of factors, including the maturity of your brand, the saturation of the market, and the competitive landscape.

Examples of common A/B testing roadmap objectives include:

- Improve conversion rate to purchase

- Increase average order value

- Increase new user product engagement

For teams just starting out, we recommend focusing on only 1-3 primary testing objectives and ranking those in order of priority. Increasing conversion rates might be more important to your team than improving email signups, so knowing where to put your time and attention and aligning across the testing team (and other stakeholders) is a non-negotiable first step.

Conduct research to establish website challenges

When you’re aligned on what you’re aiming to improve with your testing program, start the research. Research is conducted to set baselines, surface patterns, and establish challenges.

There are two types of research, and both are important: quantitative and qualitative.

Quantitative research techniques are used to establish metric baselines and surface potential friction points in the user journey. Qualitative research adds a human element to data patterns; it tells a story that the data sometimes can’t on its own.

Quantitative Research: Every team approaches research differently, but our method relies on starting with the data. By looking at one to two years of analytics reports, we form hypotheses for what’s happening across the user journey. Scope should include reviewing things like channel mix, landing pages, time-on-site, and events across the site experience.

After a thorough data analysis, you should have a good understanding of two things: optimization areas and baseline metrics.

- Optimization Areas are the pages or areas of the site that can be optimized to improve the customer experience and influence your established goals.

- Baseline Metrics are numbers that represent how your product or website is performing today in areas that are important to you. (Advanced reports will include how those metrics change based on traffic channel, device type, landing page, or seasonal fluctuation.)
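To make the idea of a baseline concrete, here is a minimal sketch of computing conversion rate and average order value per device type. The session records, field names, and the `baseline_metrics` helper are all hypothetical; in practice the numbers would come from your own analytics export.

```python
from collections import defaultdict

# Hypothetical session records, standing in for an analytics export.
sessions = [
    {"device": "mobile", "converted": True, "order_value": 40.0},
    {"device": "mobile", "converted": False, "order_value": 0.0},
    {"device": "mobile", "converted": False, "order_value": 0.0},
    {"device": "mobile", "converted": False, "order_value": 0.0},
    {"device": "desktop", "converted": True, "order_value": 80.0},
    {"device": "desktop", "converted": True, "order_value": 120.0},
    {"device": "desktop", "converted": False, "order_value": 0.0},
    {"device": "desktop", "converted": False, "order_value": 0.0},
]


def baseline_metrics(records, segment_key):
    """Return conversion rate and average order value for each segment."""
    grouped = defaultdict(list)
    for record in records:
        grouped[record[segment_key]].append(record)

    baselines = {}
    for segment, rows in grouped.items():
        orders = [r["order_value"] for r in rows if r["converted"]]
        baselines[segment] = {
            "sessions": len(rows),
            "conversion_rate": len(orders) / len(rows),
            # Average order value is only defined when there is at least one order.
            "average_order_value": sum(orders) / len(orders) if orders else None,
        }
    return baselines


baselines = baseline_metrics(sessions, "device")
for segment, metrics in baselines.items():
    print(segment, metrics)
```

Swapping `"device"` for another field, such as a traffic channel or landing page, would give the segmented view that more advanced reports include.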

Qualitative Research: When it comes to telling a story with the data, we look to qualitative research. Qualitative methodologies like conducting user testing, cataloging session recordings, and designing open-format surveys are non-negotiable for our team; while the data can show us where users are dropping off, qualitative methods tell us why.

State the challenges and isolate A/B testing roadmap opportunities

After thorough research, you should have clarity on the established challenges. For our team, this manifests in a literal list of friction points that need to be addressed, but for the purpose of demonstration, let’s imagine that we wrote all of our challenges on yellow sticky notes.

A note on challenges: as you compile a database of challenges, stay user-focused rather than product-focused. The problem with product-focused challenges is that they tend to hint at a solution that you’ve already thought up. The prescriptive nature of product-focused challenges will have you optimizing through brute force rather than thoughtful finessing. User-focused challenges work to address user needs and improve the customer experience. By focusing on user needs, you’ll unearth new ways to solve the challenges presented to you in the research phase. So stay user-focused.

Looking at the well-researched, established challenges in front of you, it’s time to divide and conquer.

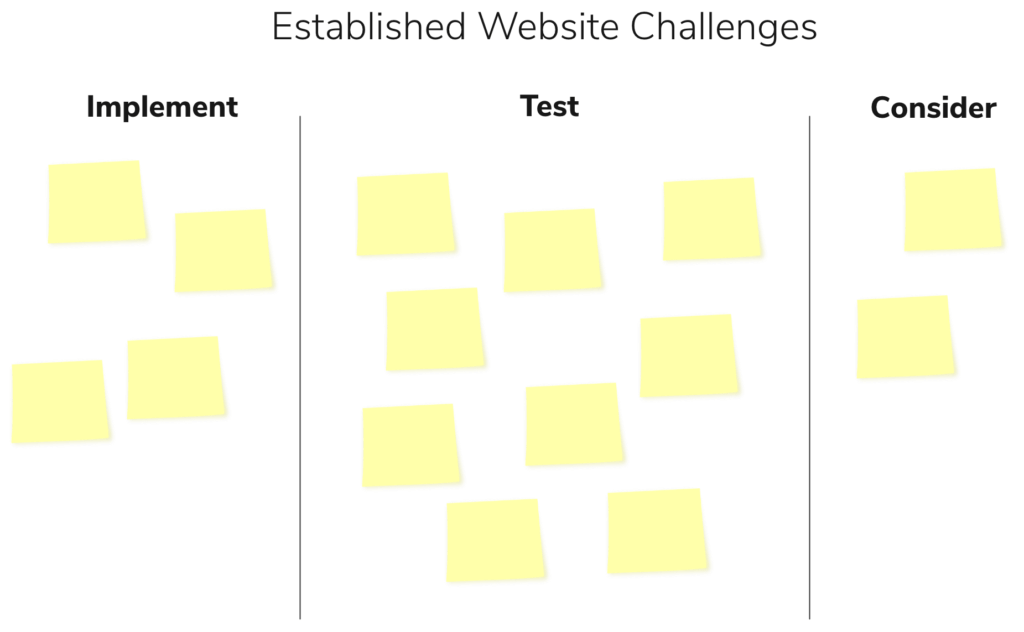

When it comes to sussing out what makes a good testing opportunity, our team uses a combination of a point system and a gravity method, but every team does this differently. A simple way to define testing opportunities is to sort challenges into three buckets: Implement, Test, and Consider.

- Implement. This bucket should contain all of the problems that are so low-risk that it’s an easy decision to just solve them immediately.

  - Examples: website bugs, form field errors, and missing content.

- Test. This bucket is generally the largest group of concepts. Use this bucket for challenges where the solution may be less clear, the challenge could be solved with multiple solutions, or the test itself will teach us something valuable about our audience.

  - Examples: hero messaging where internal interests are divided, filter layouts where the important filter categories are undefined.

- Consider. This bucket is usually a small but mighty list of challenges that are not suited for testing because they require either deeper consideration or significant resources to address.

  - Examples: platform limitations, reshooting product images, or rethinking product names.
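The three buckets above can be sketched as a simple sorting rule. This is an illustrative simplification, not our point system: the `Challenge` fields and the `assign_bucket` function are hypothetical, and the two yes/no questions stand in for what is usually a team conversation.

```python
from dataclasses import dataclass


@dataclass
class Challenge:
    description: str
    low_risk_fix: bool           # Is there one obvious, low-risk fix?
    needs_major_resources: bool  # Does it need deeper consideration or large resources?


def assign_bucket(challenge):
    """Sort a challenge into Implement, Test, or Consider."""
    if challenge.low_risk_fix:
        return "Implement"
    if challenge.needs_major_resources:
        return "Consider"
    # Everything else has an unclear or contested solution, so it is worth testing.
    return "Test"


challenges = [
    Challenge("Form field shows an error on valid input", True, False),
    Challenge("Hero messaging where internal interests are divided", False, False),
    Challenge("Product images need to be reshot", False, True),
]

buckets = {c.description: assign_bucket(c) for c in challenges}
for description, bucket in buckets.items():
    print(f"{bucket}: {description}")
```

Checking for a low-risk fix first reflects the idea that easy, safe fixes should simply be shipped rather than queued behind a test.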

Step away and get inspired

After compiling a deep bench of testing opportunities, much of the hard work (for this round) is done, so give yourself a pat on the back, acknowledge the milestone, and go explore. This is where a hunger for good user experiences comes in handy.

To gain some fresh perspective, it’s at this point that we recommend stepping away from the problem in front of you. This could mean looking at competitive user experiences, drawing on your experience in the real world, or sleeping on the problem.

One way our team at The Good formalizes this process is with a collaborative weekly meeting where we evaluate web experiences for three buckets of content: stealable, not stealable, and questionable. This open-format approach tends to be quite fun, and it’s been a great way to maintain a culture of experimentation and collaboration. These sessions train our eyes and inspire debate, but they also fuel inspiration that we bring to the design phase. Win-win!

As you explore, take note of the moments when you say “that might work for this challenge” – those are the hypotheses forming and they represent the spark of inspiration. You’ll capture your hypotheses in the next step.

Formulate Testing Hypotheses

I hesitate to call hypothesizing a whole step unto itself, because for our team, hypotheses are an important part of the design process; the design and hypothesis happen in a constant dance where who’s in the lead can shift and change.

Maggie Paveza, Strategist here at The Good, says:

“I typically have the ‘what I hope to impact’ part of the hypothesis down as a result of the research phase, but the ‘how I plan to do it’ really comes out of creating the design or having a seed of an idea.”

Whether the chicken or the egg comes first, the important step here is to catalog a hypothesis and attach it (either physically on post-its, or digitally in a project management tool) to the challenge that it’s solving for.

This ensures that you don’t lose sight of the user challenge, which is our driving force, after all!

Helpful Hint: Hypotheses are not simply the inverse of challenges. Remember how we advised on surfacing user-focused challenges? A user-focused challenge can inspire multiple hypotheses, and a hypothesis can solve for multiple challenges. Read more on crafting a good hypothesis.
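If you catalog hypotheses digitally, the many-to-many relationship described above might look something like the sketch below. The `Hypothesis` class, the challenge IDs, and the example statements are all hypothetical; any project management tool that supports linking items would serve the same purpose.

```python
from dataclasses import dataclass, field


@dataclass
class Hypothesis:
    statement: str
    # IDs of the user challenges this hypothesis is attached to.
    challenge_ids: list = field(default_factory=list)


# Hypothetical user-focused challenges, keyed by ID.
challenges = {
    "C1": "Shoppers can't tell which filter categories matter",
    "C2": "Visitors don't understand the offer from the hero section",
}

hypotheses = [
    Hypothesis("Reordering filters by usage will increase product views", ["C1"]),
    Hypothesis("Benefit-led hero copy will increase clicks into category pages", ["C2"]),
    Hypothesis("A guided quiz will help visitors find relevant products", ["C1", "C2"]),
    Hypothesis("A sticky add-to-cart button will increase purchases"),
]


def hypotheses_for(challenge_id, hypotheses):
    """List every hypothesis attached to a given challenge."""
    return [h.statement for h in hypotheses if challenge_id in h.challenge_ids]


def unattached(hypotheses, challenges):
    """List hypotheses that are not tied to any known challenge."""
    return [
        h.statement
        for h in hypotheses
        if not any(cid in challenges for cid in h.challenge_ids)
    ]


print(hypotheses_for("C1", hypotheses))
print(unattached(hypotheses, challenges))
```

The `unattached` check is a quick way to spot ideas that have drifted away from a researched user challenge.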

Prioritize your tests

Once your testing opportunities are defined and you’ve accumulated several worthy testing hypotheses, many folks will want to jump right into design. But you probably don’t have the resources or desire to just start designing every solution at once. This is where careful A/B testing roadmap prioritization comes in.

Be warned that prioritizing is not always simple. For individuals with some experience, identifying the biggest opportunities will probably be second nature. For teams, however, there are usually politics involved, which is where various established prioritization models* can come in handy.

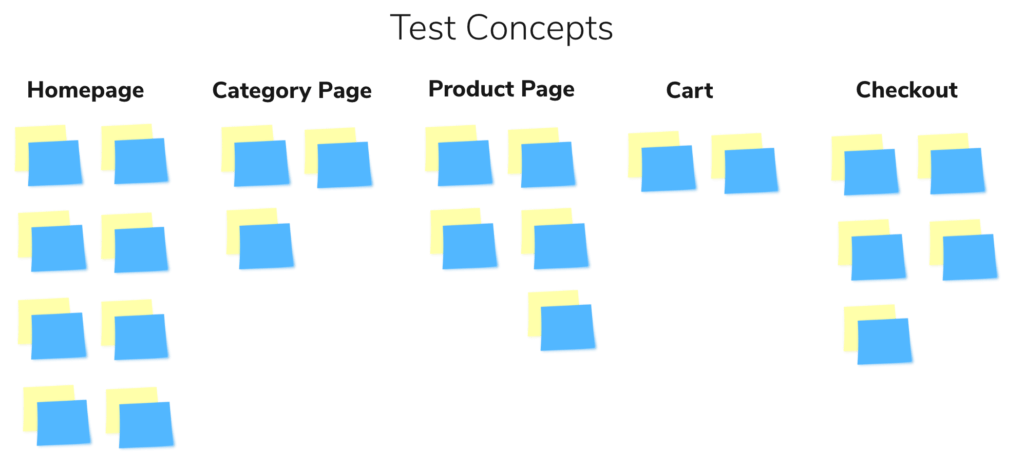

For those just beginning a testing program, we recommend keeping it simple: organize your tests by funnel point (or page) and select a few particularly exciting opportunities across various points of the conversion funnel. This helps minimize decision paralysis, and it has the benefit of keeping your team motivated; working on what’s exciting will keep your team invested in the process long enough to gain momentum.
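As a rough sketch of that simple approach, the snippet below groups opportunities by funnel point and picks the most exciting one at each. The opportunity names, funnel stages, and 1-to-5 `excitement` scores are hypothetical placeholders for whatever your team agrees on.

```python
from collections import defaultdict

# Hypothetical testing opportunities with a team-assigned excitement score (1-5).
opportunities = [
    {"name": "Hero messaging", "funnel_stage": "landing", "excitement": 5},
    {"name": "Navigation labels", "funnel_stage": "landing", "excitement": 2},
    {"name": "Filter layout", "funnel_stage": "category", "excitement": 4},
    {"name": "Product image order", "funnel_stage": "product", "excitement": 3},
    {"name": "Size guide placement", "funnel_stage": "product", "excitement": 4},
]


def pick_per_stage(opportunities, per_stage=1):
    """Group opportunities by funnel stage and keep the most exciting ones."""
    by_stage = defaultdict(list)
    for opportunity in opportunities:
        by_stage[opportunity["funnel_stage"]].append(opportunity)

    picks = {}
    for stage, items in by_stage.items():
        ranked = sorted(items, key=lambda o: o["excitement"], reverse=True)
        picks[stage] = [o["name"] for o in ranked[:per_stage]]
    return picks


roadmap = pick_per_stage(opportunities)
print(roadmap)
```

Picking across stages, rather than taking the top scores overall, keeps the first round of tests spread along the funnel.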

Prioritize, design, critique, then repeat

Once you have a prioritized list of testing opportunities, congratulations! You have created a testing roadmap. But simply having a roadmap does not mean that the prioritization is done.

At this point, you’re ready to move on to design. As you work through the design phase, allow yourself the authority to re-prioritize your roadmap. We recommend regular meetings to collectively evaluate upcoming test hypotheses and designs before they go to development.

Formalizing this pre-development review is a valuable way to improve your testing skills and keep the visual design aligned to brand guidelines. But there is a more important outcome of this meeting, which is that a natural micro-prioritization happens simultaneously.

Reviews will occasionally reveal that a design cannot be executed within the testing environment or that additional creative and/or development resources are needed. In those cases, you may find that you either need to simplify a design or altogether deprioritize a concept while you compile needed collateral. This micro-prioritization ensures your team maintains momentum: they’ll progress with easier tests while compiling the needed resources for more complex challenges. The resulting sprints will contain a healthy mix of testing opportunities with varying levels of ease and impact, and your team will learn a lot in the process.

As you get more sophisticated with experimentation, make sure you’re armed with a great prioritization system like the ADVIS’R Prioritization Framework™. It’s best for teams who are already running experiments, want to develop a more systematic approach to experimentation, and have oversight from a decision-maker who wants transparency into the process.

What’s next for your A/B testing roadmap?

As you build your A/B testing capabilities, don’t let overthinking get in the way of actually launching tests. Eventually you may want to plan your testing roadmap for the clearest results, but in the early days of experimentation, done is better than perfect.

Young testing teams often want to increase their A/B testing velocity, but taking the proper approach to measuring the impact of your tests is what will help your team grow in expertise. As you launch and close your tests, measuring the impact can be as much fun as finding opportunities for your A/B testing roadmap! Evaluate testing outcomes with a keen eye for iteration and other potential tests.

As you tackle your ideas and your existing roadmap grows shorter, be sure to conduct periodic conversion research to surface new opportunities and keep an open feedback loop with your audience. We’re all about helping new testing teams cultivate a culture of experimentation, so if you’re looking for expert advice on how to build the strength and collaborative skills of your new testing team, reach out to us.

Happy testing!

*A word on prioritization models: Prioritization models come in all shapes and sizes, and there is no one right way. These models help with surfacing the biggest opportunities, overcoming bias, and putting effort in the right place. But the right model for your team depends on factors including who is in the room and what the culture of the organization is. Ease, for instance, might be very important to a team of one, but not as important to a team with a highly experienced A/B test developer.
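To illustrate how the same ideas can rank differently depending on the team, here is a minimal weighted-score sketch. It is not any particular published model: the `impact` and `ease` criteria, the 1-to-5 scores, and both sets of weights are assumptions made up for the example.

```python
def weighted_score(scores, weights):
    """Weighted average of criterion scores (each scored 1-5)."""
    total_weight = sum(weights.values())
    return sum(scores[name] * weight for name, weight in weights.items()) / total_weight


# Hypothetical test ideas scored on impact and ease.
page_redesign = {"impact": 5, "ease": 1}
copy_change = {"impact": 3, "ease": 5}

# A team of one leans on ease; a team with an experienced developer leans on impact.
solo_weights = {"impact": 1, "ease": 2}
experienced_team_weights = {"impact": 3, "ease": 1}

for label, weights in [("solo", solo_weights), ("experienced team", experienced_team_weights)]:
    redesign = weighted_score(page_redesign, weights)
    copy = weighted_score(copy_change, weights)
    winner = "page redesign" if redesign > copy else "copy change"
    print(f"{label}: redesign={redesign:.2f}, copy={copy:.2f}, start with {winner}")
```

With these made-up numbers, the solo tester would start with the copy change, while the experienced team would start with the redesign.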

About the Author

Natalie Thomas

Natalie Thomas is the Director of Digital Experience & UX Strategy at The Good. She works alongside ecommerce and product marketing leaders every day to produce sustainable, long term growth strategies.