How to Run Concurrent A/B Tests without Compromising Results

Determining the appropriate velocity of testing for your team can be a challenge. This article explains why we prefer high-velocity testing over testing in isolation, and how to run high-velocity A/B tests on your own.


You’re always faced with a difficult decision: Should you run multiple tests simultaneously and get quicker results, or isolate each test to get the most accurate outcome from each individual test?

The short answer: if you’re only running one test at a time on your website, you’re leaving money on the table, and your user experience is suffering.

If you’re lucky, you have a queue of great, data-backed ideas ready to be tested, but maintaining testing momentum without compromising clean results can be a headache for you and your team.

In this article, we’ll cover:

- Why A/B testing velocity is important

- Why we prefer high-velocity testing over isolation

- How to run multiple A/B tests the right way

Why Is A/B Testing Velocity Important?

A/B testing is one of the most efficient methods for increasing revenue and optimizing the customer experience on your ecommerce site. If you want to get the most out of your testing efforts, you’ll need to run multiple A/B tests at once to get quicker results.

As the Jeff Bezos adage goes, “Our success at Amazon is a function of how many experiments we do per year, per month, per week, per day.” Without velocity, any testing program will be low impact and may not be worth the investment.

The more tests you can perform in a certain amount of time, the more efficient you’ll be in optimizing your site for conversions.

Why We Prefer High-Velocity Testing Over Isolation

High-velocity testing aligns with the way a site actually runs: dynamically, with small, iterative changes over time that all support your long-term key performance indicators (KPIs) and strategy. Customer expectations evolve with the times, so your site should too.

Testing in isolation means missing opportunities to understand how your changes interact with each other over time. Each test’s results send you down a one-way path, limited by the road the previous tests laid out.

Obsessing over changing one detail at a time in isolation can lead to long-term stagnation and a site that just can’t keep up with customer expectations.

Note: You may be wondering whether multivariate testing is the best solution to your isolation dilemma. However, if you’re weighing high-velocity vs. isolated testing, you likely don’t have enough site traffic to run multivariate tests with any meaningful velocity, because every combination of changes needs its own share of traffic.
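The traffic problem with multivariate testing is multiplicative: a full-factorial test has to fill every combination of variants. The sketch below assumes, for simplicity, that every combination needs the same hypothetical sample size; real sample sizes depend on your baseline rate and the effect you want to detect.

```python
from math import prod

def cells(variants_per_element: list[int]) -> int:
    """Number of combinations a full-factorial multivariate test must fill."""
    return prod(variants_per_element)

def total_visitors(variants_per_element: list[int], visitors_per_cell: int) -> int:
    """Visitors needed if every combination needs the same sample size."""
    return cells(variants_per_element) * visitors_per_cell

# Hypothetical: each cell needs 20,000 visitors to detect the effect you care about.
PER_CELL = 20_000

ab_test = total_visitors([2], PER_CELL)    # control vs. one variant
mvt = total_visitors([2, 2, 2], PER_CELL)  # 3 elements, 2 versions each

print(ab_test, mvt, mvt // ab_test)  # 40000 160000 4
```

Testing just three elements with two versions each already needs four times the traffic of a simple A/B test, and the gap widens with every element you add.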

How to Run Multiple A/B Tests the Right Way

Test all of the key pages of your site: Most A/B tests running on ecommerce sites are testing one (or all) of the following pages:

When you’re organizing your testing program, make sure you’re not focusing all your ideas on one page of your site. When operating at high velocity, you should be testing several pages at any given time so you’re not neglecting any aspect of the customer experience.

Isolate tests by page: Think about tests in terms of where they touch users in the funnel, and isolate them page by page. Running multiple tests on the same page at once muddies the waters, while organizing tests by funnel stage helps you understand what keeps people interested in learning more about your product.
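One way to enforce page-level isolation while still running tests concurrently is a small registry that refuses a second test on a page that already has one, and that buckets users with a deterministic hash. This is a hypothetical Python sketch: the class, page, and test names are illustrative and do not reflect any testing tool’s API, and most A/B testing platforms handle assignment for you.

```python
import hashlib
from typing import Optional

class PageTestRegistry:
    """Hypothetical registry: at most one running test per page, many pages at once."""

    def __init__(self) -> None:
        self._test_on_page: dict[str, str] = {}

    def launch(self, page: str, test_id: str) -> None:
        current = self._test_on_page.get(page)
        if current is not None:
            raise ValueError(f"{page!r} is already running {current!r}; finish it first")
        self._test_on_page[page] = test_id

    def finish(self, page: str) -> None:
        self._test_on_page.pop(page, None)

    def assign(self, user_id: str, page: str) -> Optional[str]:
        """Return 'control' or 'variant' for the page's test, or None if nothing runs there."""
        test_id = self._test_on_page.get(page)
        if test_id is None:
            return None
        # Salting the hash with the test id splits users independently per test,
        # and the same user always lands in the same group for a given test.
        digest = hashlib.sha256(f"{test_id}:{user_id}".encode()).digest()
        return "variant" if digest[0] % 2 else "control"

registry = PageTestRegistry()
registry.launch("product_page", "pdp-image-gallery")
registry.launch("checkout", "checkout-trust-badges")
print(registry.assign("user-42", "product_page"))  # 'control' or 'variant', stable per user
```

A user can be in the product page test and the checkout test at the same time, but never in two tests on the same page.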

Note: When testing against funnel goals, conversion rate shouldn’t be the only metric you monitor. Although a transaction may be the ultimate goal, a micro-conversion, such as getting a user to the next stage of the funnel, also counts as a win in this scenario. A few metrics we use to measure the success of a test include:

- Click-through rate (CTR)

- Revenue per visitor

- Field completions

- Average session duration

- Bounce rate
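As a rough illustration, the sketch below computes four of these metrics from a list of session records for one variant. The `Session` fields are hypothetical, and analytics tools define these metrics in their own ways (for example, what counts as a bounce), so treat the formulas as common definitions rather than a standard.

```python
from dataclasses import dataclass

@dataclass
class Session:
    # Hypothetical session record; field names are illustrative.
    visitor_id: str
    pages_viewed: int
    saw_cta: bool        # the call to action was shown
    clicked_cta: bool
    duration_s: float
    revenue: float = 0.0

def summarize(sessions: list[Session]) -> dict[str, float]:
    """Compute per-variant metrics; assumes at least one session."""
    impressions = sum(s.saw_cta for s in sessions)
    clicks = sum(s.clicked_cta for s in sessions)
    visitors = len({s.visitor_id for s in sessions})
    return {
        "ctr": clicks / impressions if impressions else 0.0,
        "revenue_per_visitor": sum(s.revenue for s in sessions) / visitors,
        "avg_session_duration_s": sum(s.duration_s for s in sessions) / len(sessions),
        # A single-page session is treated as a bounce here.
        "bounce_rate": sum(s.pages_viewed == 1 for s in sessions) / len(sessions),
    }

variant_b = [
    Session("a", 1, True, False, 20.0),
    Session("b", 3, True, True, 180.0, revenue=50.0),
    Session("b", 2, False, False, 60.0),
    Session("c", 1, True, False, 10.0),
]
print(summarize(variant_b))
```

Computing the same summary for control and variant side by side makes it easier to spot a micro-conversion win even when transactions barely move.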

Stagger your test launches: Track launch dates and stagger them so that if results from one previously running test change drastically with the launch of another, you’re more likely to notice the impact.
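A simple way to track staggered launches is to keep each test’s launch and end dates and flag any launch that lands too soon after another test that is still running. This Python sketch assumes a hypothetical minimum gap of seven days; the right gap depends on how quickly your tests settle, and the test names are illustrative.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

@dataclass
class Experiment:
    name: str
    launched: date
    ended: Optional[date] = None  # None while the test is still running

def crowded_launches(runs: list[Experiment], min_gap_days: int = 7) -> list[tuple[str, str]]:
    """Return (earlier, later) pairs where `later` launched fewer than `min_gap_days`
    after `earlier` while `earlier` was still running."""
    flagged = []
    ordered = sorted(runs, key=lambda r: r.launched)
    for i, earlier in enumerate(ordered):
        for later in ordered[i + 1:]:
            overlaps = earlier.ended is None or later.launched <= earlier.ended
            too_soon = later.launched - earlier.launched < timedelta(days=min_gap_days)
            if overlaps and too_soon:
                flagged.append((earlier.name, later.name))
    return flagged

runs = [
    Experiment("pdp-image-gallery", date(2024, 3, 1)),
    Experiment("checkout-trust-badges", date(2024, 3, 4)),
    Experiment("home-hero-copy", date(2024, 3, 20)),
]
print(crowded_launches(runs))  # [('pdp-image-gallery', 'checkout-trust-badges')]
```

The same list of launch dates can also be marked on each test’s results chart, so a sudden shift in a running test can be matched to the launch that coincided with it.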

Rely on statistical significance instead of time since launch: It’s tempting to set fixed time frames for your tests so that you have a consistent testing schedule. The problem with this practice is that it can compromise your results. If you stop a test before it reaches statistical significance, the results may not be a true indicator of the test’s success.
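For a conversion-rate test, one common way to check significance is a two-sided, two-proportion z-test, and a matching formula estimates how many visitors each variant needs before you launch. The Python sketch below uses those textbook normal-approximation formulas with an assumed 5% significance level and 80% power. It is not necessarily what your testing tool computes (many use sequential or Bayesian methods), and the conversion numbers are hypothetical.

```python
from math import ceil, erfc, sqrt
from statistics import NormalDist

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided pooled z-test for control (a) vs. variant (b). Returns (z, p_value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = erfc(abs(z) / sqrt(2))  # same as 2 * (1 - Phi(|z|))
    return z, p_value

def sample_size_per_variant(baseline: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors per variant to detect `relative_lift` over `baseline` (normal approximation)."""
    p1, p2 = baseline, baseline * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    root = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
            + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2)))
    return ceil(root ** 2 / (p2 - p1) ** 2)

# Hypothetical results: 2.0% vs. 2.6% conversion on 10,000 visitors each.
z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}")

# Planning ahead: a 2% baseline and a 10% relative lift need on the order of
# 80,000 visitors per variant.
print(sample_size_per_variant(0.02, 0.10))
```

Deciding the sample size up front also guards against the opposite mistake: checking results repeatedly and stopping at the first significant reading inflates the false-positive rate.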

Separate site-wide navigational tests: If you’re conducting an expansive test that impacts the navigational hierarchy of your entire site, consider that a top-of-the-funnel test and isolate navigational tests from each other. Even when operating at high velocity, running numerous site-wide tests at once can compromise clean testing results.
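One common way to keep site-wide navigation tests apart is to make them mutually exclusive: hash each user into exactly one of the running navigation tests, so nobody sees two navigation changes at once. This is a hypothetical Python sketch; the layer name and test names are illustrative. The trade-off is traffic: with k exclusive tests, each one gets only about 1/k of your visitors, so each takes longer to reach significance.

```python
import hashlib
from typing import Optional

def exclusive_slot(user_id: str, layer: str, slots: int) -> int:
    """Deterministically map a user to one of `slots` buckets within a layer."""
    digest = hashlib.sha256(f"{layer}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % slots

def navigation_test_for(user_id: str, nav_tests: list[str]) -> Optional[str]:
    """Place each user in at most one site-wide navigation test.

    Within its own slice of users, each test would still split
    control and variant separately.
    """
    if not nav_tests:
        return None
    return nav_tests[exclusive_slot(user_id, "sitewide-navigation", len(nav_tests))]

nav_tests = ["mega-menu-redesign", "sticky-header"]
for user in ["user-1", "user-2", "user-3"]:
    print(user, navigation_test_for(user, nav_tests))
```

Note that in this sketch, adding or removing a navigation test changes the number of slots and reshuffles users, so it is safest to change the set of exclusive tests only between test cycles.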

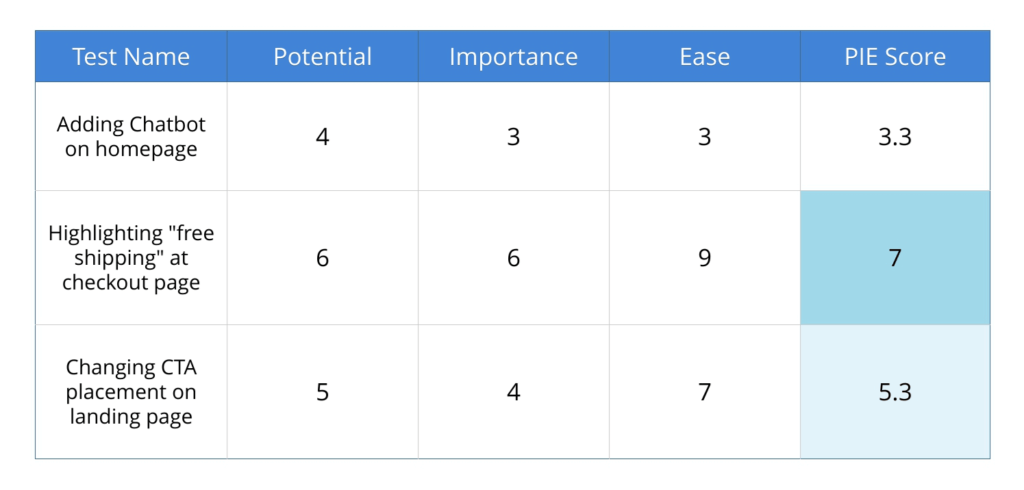

Prioritize your tests: There are a variety of effective options to prioritize tests for your team, one of the most common being the PIE framework.

The PIE framework is a popular prioritization method that ranks tests on three criteria: potential, importance, and ease. Score each test on each criterion, then average the three scores to get its PIE score, which helps you rank tests and determine the priority level of each one.

- Potential: How much potential for improvement does the page you’re testing have? Take a look at your site analytics and heatmap data to determine which pages have the most (or least) potential for improvement.

- Importance: How would you rank the importance of the page you’re testing? Think of it this way: Users on your FAQ page may not be as valuable as the users on your checkout page.

- Ease: How difficult will it be to implement this test on the page? Are there technical constraints that may make this test harder to implement than others?
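The PIE calculation itself is just an average, so a spreadsheet works fine; for teams that keep their backlog in code, here is a minimal Python sketch. The ideas and scores are hypothetical, and it assumes each criterion is scored on the same scale (1 to 10 here) with higher meaning better, so a high ease score means an easy test.

```python
from dataclasses import dataclass

@dataclass
class TestIdea:
    name: str
    potential: float   # room for improvement on the page (1-10, assumed scale)
    importance: float  # value of the traffic that page receives (1-10)
    ease: float        # higher = easier to build and launch (1-10)

    @property
    def pie_score(self) -> float:
        return (self.potential + self.importance + self.ease) / 3

def prioritize(ideas: list[TestIdea]) -> list[TestIdea]:
    """Highest PIE score first."""
    return sorted(ideas, key=lambda idea: idea.pie_score, reverse=True)

backlog = [
    TestIdea("FAQ page layout", potential=6, importance=3, ease=9),
    TestIdea("Checkout trust badges", potential=7, importance=9, ease=8),
    TestIdea("Product page gallery", potential=8, importance=8, ease=4),
]

for idea in prioritize(backlog):
    print(f"{idea.pie_score:.1f}  {idea.name}")
# 8.0  Checkout trust badges
# 6.7  Product page gallery
# 6.0  FAQ page layout
```

Even though the FAQ idea is the easiest to build, the low importance of that page pushes it to the bottom of the list.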

Learn from Your A/B Tests

Determining the appropriate testing velocity for your team is vital to running successful, productive A/B tests. Run too few tests in “perfect” isolation and your experimentation program will stagnate; run too many at once and it may be difficult to determine the true impact of each test.

Here’s the deal: It’s impossible for us to tell you the perfect testing velocity for your team, because it varies from team to team. Don’t start comparing yourself to others in your industry; just start generating ideas based on the data and insights you have available, and test those ideas at your own pace. Eventually, you’ll find a rhythm that works, and you can run with it.

Remember that there is no perfect testing scenario; just follow the KPIs and the results you get from each test. Let the data guide the way—keeping in mind what you know about your audience—and you’ll be well on your way to designing winning A/B tests.

About the Author

Natalie Thomas

Natalie Thomas is the Director of Digital Experience & UX Strategy at The Good. She works alongside ecommerce and product marketing leaders every day to produce sustainable, long-term growth strategies.