What is A/B Testing (+ Five A/B Testing Examples You Can Steal)

Learn more about A/B testing with practical how-tos, examples, and tools.

When it comes to website design, it can be tempting to let your intuition guide the decisions you make. But a pretty site and high-quality products don’t guarantee a sale—no matter how good you feel about them.

Sometimes, an educated guess gets the job done. But when it comes to improving your site (and increasing conversions), wouldn’t it be nice to have concrete information? A/B testing can help you suss out usable data, so you can make effective changes to your site.

Think of it this way. Sure, you can put your house on the market and facilitate the sale yourself, but wouldn’t it be better to work with an experienced real estate agent so that you know you’re getting a great deal?

Going with your gut might improve performance, but A/B testing grounds your changes in evidence, which usually leads to better results and gives you more confidence in the outcome.

Below, we’ll take a look at:

- What is A/B testing?

- Why do we do A/B testing?

- How do you know if you should do A/B testing?

- 5 A/B testing examples

- Lessons from the A/B testing examples

- A/B testing tools

What is A/B testing?

A/B testing is a form of randomized controlled experimentation on the web. It’s a simple (and ideally iterative) way to compare two or more versions of something to find out which one performs better, whether that’s with on-site visitors, your target audience, or your email list.

It allows you to test variations of content, design, or navigation, gather concrete evidence, and make decisions based on real data. You can make immediate improvements, and then repeat the process.
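Testing tools normally handle the randomization for you, but it helps to see roughly how it can work. The sketch below is a minimal Python illustration, not any particular tool’s method. It assumes each visitor has a stable ID (for example, from a first-party cookie) and uses a hypothetical `assign_variant` helper that hashes that ID, so a visitor always lands in the same group while traffic splits roughly evenly between the original version (control) and the changed version (variant).

```python
import hashlib

def assign_variant(user_id: str, experiment: str,
                   variants: tuple[str, ...] = ("control", "variant")) -> str:
    """Deterministically bucket a visitor into one of the variants (hypothetical helper)."""
    # Including the experiment name means a visitor's bucket in one test
    # is unrelated to their bucket in another test.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the hash to an index; the same input always yields the same variant.
    return variants[int(digest, 16) % len(variants)]

# Usage: the same visitor gets the same version on every page load.
print(assign_variant("visitor-123", "homepage-redesign"))
```

Hashing instead of picking randomly on each visit keeps the experience consistent for returning visitors, which matters if a test runs for several weeks.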

Before we move on: Let’s learn the lingo (it’ll make it easier to understand how A/B testing works).

A/B testing glossary

Here are a few common terms that are used when people discuss A/B testing.

- Control: The version of the site or page that stays the same before and during the test.

- Variant: The altered version(s) of the site or page that reflect the changes that are being tested.

- Conversion: A user taking any desired action, e.g., clicking a contact button or filling out a form.

- Conversion rate: The percentage of sessions in which a user completed the desired action.

- Call to Action (CTA): An instruction that prompts users to respond. Often represented as a clickable button with action-oriented text, e.g., “Add to Cart.”

- Optimization: The process of improving elements to increase conversions.
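The conversion rate definition above is simple arithmetic, and test results are often reported as the variant’s change relative to the control. Here is a minimal Python sketch of both calculations; the session and conversion counts are made up purely for illustration.

```python
def conversion_rate(conversions: int, sessions: int) -> float:
    """Conversion rate as a percentage of sessions."""
    if sessions <= 0:
        raise ValueError("sessions must be positive")
    return 100 * conversions / sessions

def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Percentage change of the variant's rate relative to the control's rate."""
    if control_rate == 0:
        raise ValueError("control rate must be non-zero")
    return 100 * (variant_rate - control_rate) / control_rate

# Hypothetical numbers: 10,000 sessions per version.
control = conversion_rate(200, 10_000)   # 2.0%
variant = conversion_rate(230, 10_000)   # 2.3%
print(f"lift: {relative_lift(control, variant):.1f}%")  # prints "lift: 15.0%"
```

Note the difference between the two numbers: the variant is only 0.3 percentage points higher, but that is a 15% relative lift.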

Why do we do A/B testing?

A/B testing delivers measurable, data-backed improvements, so it’s no wonder that it has become a mainstay of conversion optimization. Here are some of the ways A/B testing can help your ecommerce business.

Benefits of A/B testing

Regular, iterative A/B testing can improve your conversion rates both in the short term and over the long run. Here’s how:

Decreased bounce rate

By creating different versions of the same page (or button, subject line, etc.), you can measure results, make changes, and keep visitors interested, lowering your bounce rate.

Increased engagement

As you move through testing cycles, you’ll update your site with “winning” variants that increase clicks, conversions, etc. You move away from changes that only bring more browsers to your site and toward the ones that create more engaged customers.

Better content

A/B testing shows you what your customers want to watch, read, or listen to. And the more valuable information you provide, the more likely people are to purchase from your brand.

Lower cart and checkout abandonment rates

By testing the variables in your checkout process, you can find the simplest, most effective route to purchase, so people are more likely to complete their order.

More efficient navigation and checkout

Things like confusing navigation and a clunky checkout process don’t go over well with customers. They’re less likely to stay on your site, let alone complete an order. A/B testing allows you to optimize your site for UX.

A/B testing is one of the most surefire ways to make effective changes to your website, app, and marketing materials. But how do you decide when and what to test?

How do you know if you should do A/B testing?

In an ideal world, ecommerce companies would continuously run A/B tests so they could make decisions based on data rather than on intuition alone.

In fact, that’s why we designed and launched our Digital Experience Optimization Program™, to help digital leaders understand which optimization practices will help them scale. But we realize that’s not always an option, so here’s how to determine whether or not A/B testing is right for your business at the moment.

When to run an A/B test

While this isn’t an exhaustive list, here are a few situations where A/B testing is especially valuable for brand recognition, business growth, and keeping churn low.

Changing a feature or service

Anytime you change a feature or a service, you should be A/B testing. Why? Before you invest in rolling out a new feature, you want to make sure it works.

The same is true elsewhere. If you love fishing, wouldn’t you test out a few worms or hooks from a new store and make sure you get some bites before you buy in bulk?

Sluggish (or falling) conversion rate

A dropping conversion rate should cause some concern. When this happens on your site, rule out external influences like seasonality. Then review your marketing efforts to see if you are investing in unqualified traffic.

Once those major factors are ruled out, make a plan to begin A/B testing. Identify the specific goals that aren’t being met and come up with a viable hypothesis.

Remember: Although you use A/B testing to improve your conversion rate, A/B testing doesn’t optimize conversions; it optimizes all the little pieces of the puzzle.

Identify a strong value proposition

For brands that are already collecting user data through surveys, reviews, and customer research, A/B testing messaging on site is a great way to confirm or deny what your customers are telling you via other methods.

For example, if in your research customers are saying they want to know more about the health benefits and environmentalism of your products, A/B test which messaging converts better. This method identifies what value proposition really sells.

When A/B testing might not be worth it

There are instances when A/B testing just doesn’t make sense (and in rare cases, it may be detrimental).

Price changes

With the boom of social influencers and more transparency online, A/B testing your prices can be risky. In essence, you’re offering the same product at different price points to different customers. Sound unethical? It really is.

Plus, A/B testing prices isn’t a smart business decision. Even if the lower price proves more popular in an A/B test, for example, it doesn’t mean that it makes financial sense for the company.

A better idea: Offer different products (or subscription options) at different price points to find out what interests your audience the most.

Your audience is still small

If your business is young and you’re not seeing much traffic, it’s better to wait on A/B testing. Why? With a small sample, your test is unlikely to reach statistical significance, so you can’t tell whether a difference between versions is real or just noise.

At this point, A/B testing isn’t very meaningful, and your time would probably be better spent working on brand recognition, user research, growth, etc. As a starting point, make sure you are collecting the right data so that you can begin A/B testing as you grow.
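To see why traffic matters, you can estimate how many visitors each version needs before a test can reliably detect a change. The sketch below uses a common normal-approximation formula for comparing two conversion rates. The 5% significance level, 80% power, and the example baseline and lift are assumptions chosen for illustration, and dedicated sample size calculators may use slightly different formulas.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH version for a two-sided test."""
    p1 = baseline_rate                        # control conversion rate
    p2 = baseline_rate * (1 + relative_lift)  # conversion rate you hope to detect
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must be between 0 and 1 and must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)

# Hypothetical site: 2% baseline conversion rate, hoping to detect a 10% relative lift.
print(sample_size_per_variant(0.02, 0.10))
```

With these assumptions the estimate comes out at roughly 80,000 visitors per version, which is why a low-traffic site may not be able to finish a meaningful test in a reasonable amount of time. Larger expected lifts need far fewer visitors.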

The change is low-risk

Some tweaks that you’ll be making are low-stakes. If the proposed adjustment will likely improve people’s experience and there’s little risk to making the change right away, do it. In these cases, there’s no point wasting time on A/B testing.

A/B testing: a valuable tool

Although there are some situations where A/B testing doesn’t make sense, more often than not running an A/B test will lead to valuable findings.

“Whatever aspect of operations companies care most about—be it sales, repeat usage, click-through rates, or time users spend on a site—they can use online A/B tests to learn how to optimize it,” Ron Kohavi and Stefan Thomke said in the Harvard Business Review.

Now… let’s get to those A/B testing examples.

5 A/B testing examples

Before you start using A/B testing to improve your conversion rate, take a look at how these ecommerce companies used A/B testing to help increase their conversion rates:

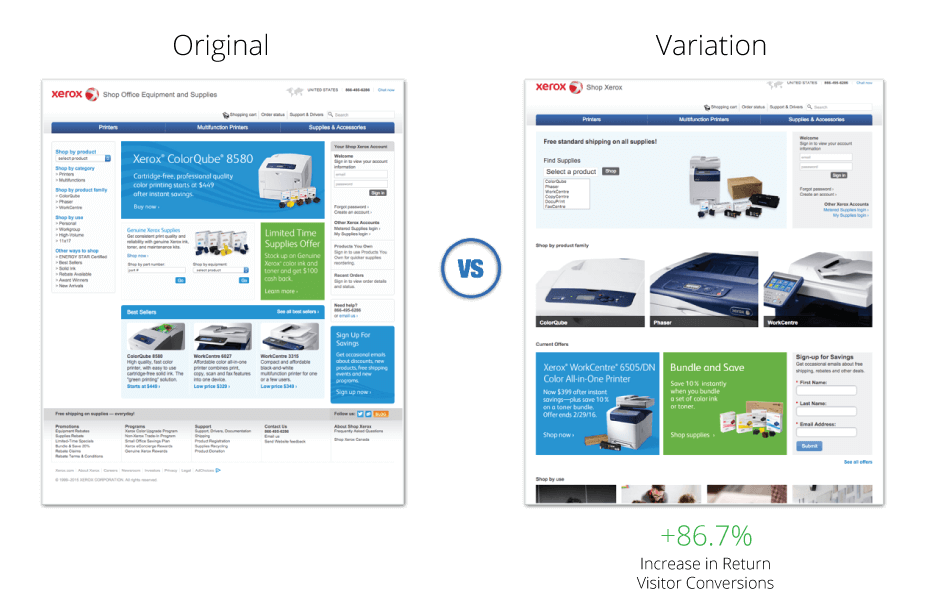

1. Xerox: Home page redesign

Xerox needed a new, more effective home page, so the team planned a broad redesign.

Their data showed that returning visitors were 60% more likely to make a purchase than first-time visitors, so the team focused on improving the conversion rate of returning visitors.

With redesigned visual elements and more clarity, the home page redesign resulted in an 86.7% growth in conversions for returning visitors.

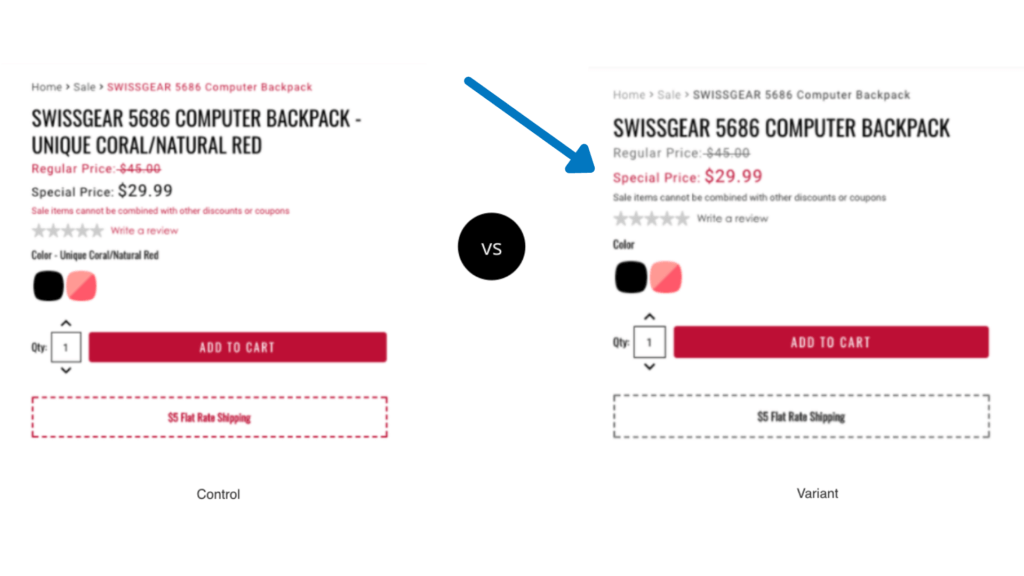

2. Swiss Gear: Improved product pages

Swiss Gear needed a little help with their user experience. The goal was to reduce clutter on product detail pages and improve focus on the product sales price.

By running A/B tests and acting on the results, Swiss Gear saw a 52% increase in conversions. (During the holiday season, the increase reached 137%.)

Note the changes in how the product name is displayed, where the text is red, and where the text is black. In the variant, the important information is clear and easy to find.

3. SmartWool Socks: Better browsing experience

SmartWool’s category pages were a bit chaotic for visitors, so the team used an A/B test to redesign them.

The baseline design seemed to be a cool idea that didn’t function well. That was tested against the new page with an organized grid layout.

“After testing 25,000 visitors, they discovered that using the grid layout led to a 17.1% increase in average return per visitor.”

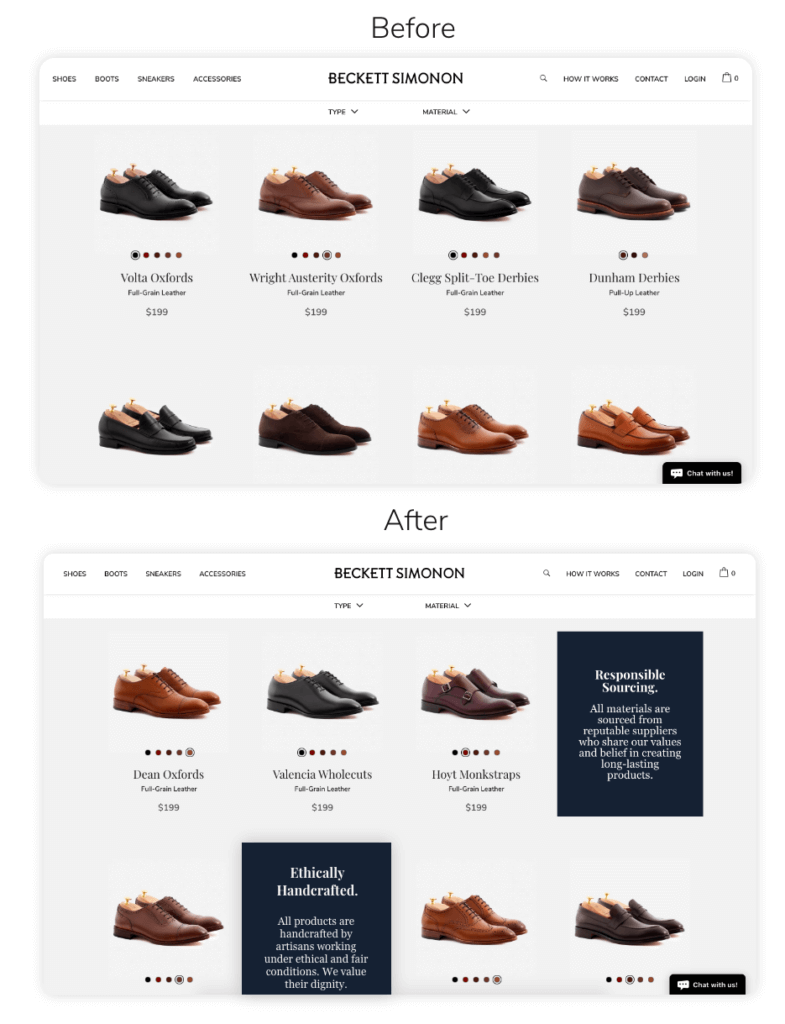

4. Beckett Simonon: Create a story-driven experience

Beckett Simonon’s customers relied on imagery to make their purchase decision, so the team focused on strengthening the site’s visuals.

The hypothesis: Combining messaging about the company’s ethical standards and high-quality images may position the products as a valuable, thoughtful purchase.

Copy that reflected the company’s sustainable business practices was tested against copy that focused on the quality of the products.

The results: The copy that focused on ethical responsibility produced a 5% higher conversion rate than the control (an annualized return on investment of 237%).

5. Step-by-step A/B test: Fictional ecommerce company

Although this video is not based on a real company, it’s an incredibly comprehensive how-to.

It walks through the details of designing an A/B test, running the experiment, and interpreting the results.

Lessons from the A/B testing examples

Now that you’ve been able to see a few A/B testing case studies, what should you take away? And how can you put what you’ve learned into action?

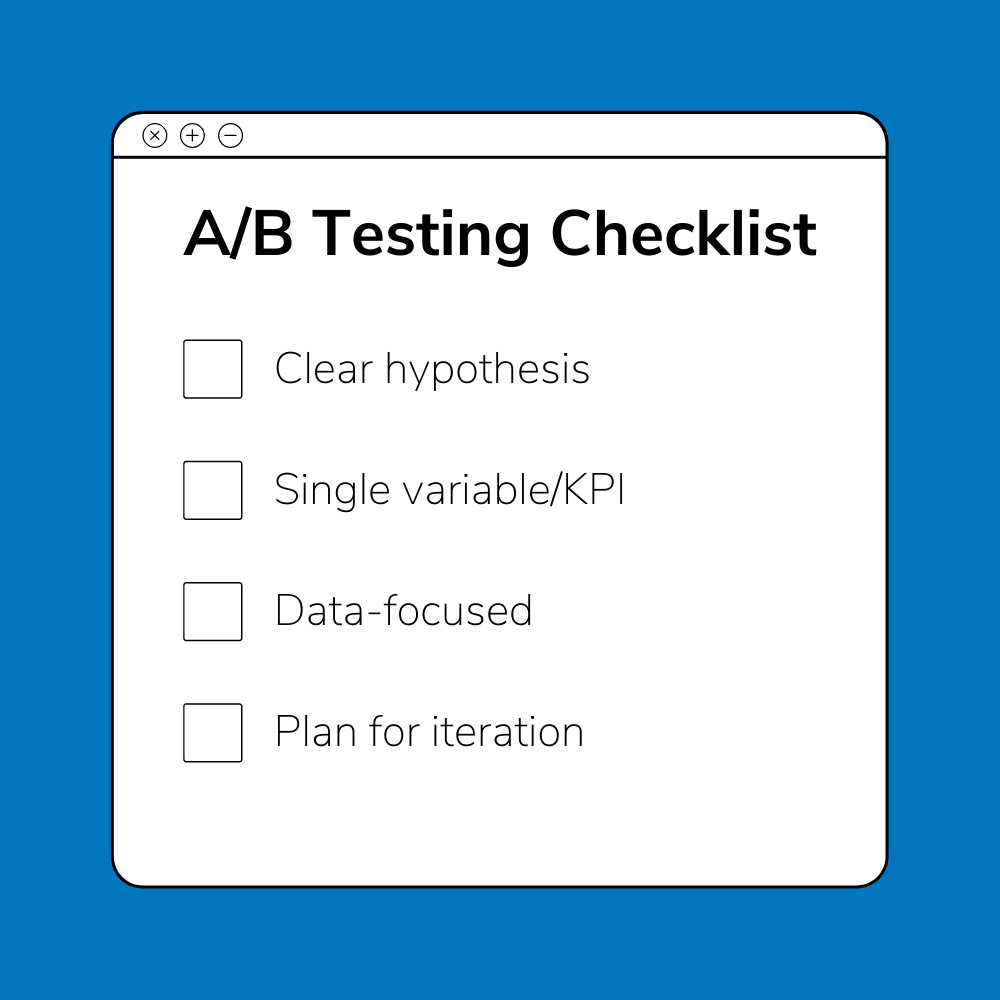

You might have noticed that each one of the A/B testing examples had a clear hypothesis, tested a specific variable, and acted on the data. To run your own successful tests, here are a few tactics to follow when you’re A/B testing:

Create a thoughtful hypothesis that can be clearly proven or disproven

To be more specific: Come up with a well-defined, thoughtful hypothesis. Document what you’ll be testing, why it’s being tested, and what changes you expect to see compared to the control.

Here’s an example:

Observation: Low conversion rate.

Research: Lots of form fields and dropdowns.

Hypothesis: If we reduce the number of fields, the conversion rate will go up.
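If you document hypotheses this way, a consistent structure makes them easier to compare and revisit later. Here is one possible sketch in Python. The `TestPlan` record and its field names are hypothetical, not part of any testing tool, and it deliberately holds exactly one variable and one primary KPI.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TestPlan:
    """Hypothetical record for documenting one A/B test before it runs."""
    observation: str
    research: str
    hypothesis: str
    variable: str            # the single element being changed
    primary_kpi: str         # the single metric that decides the test
    expected_direction: str  # "increase" or "decrease"

    def __post_init__(self) -> None:
        # Reject vague expectations so the hypothesis can be clearly proven or disproven.
        if self.expected_direction not in ("increase", "decrease"):
            raise ValueError("expected_direction must be 'increase' or 'decrease'")

plan = TestPlan(
    observation="Low conversion rate",
    research="Lots of form fields and dropdowns",
    hypothesis="If we reduce the number of fields, the conversion rate will go up",
    variable="number of form fields",
    primary_kpi="conversion rate",
    expected_direction="increase",
)
print(plan)
```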

Limit your hypothesis to one variable and one KPI at a time

The last thing you want is bad or ambiguous data. Test one variable at a time and measure the change for a single key performance indicator.

Always use a control

Your original version will be your control. It’s your baseline to compare results with. Without a control, you can’t get a true picture of the new version’s performance.

Don’t ignore the data

Sometimes you’re surprised by the results. Something you thought would be a for-sure winner ends up producing inconclusive results or lagging behind your existing design.

Instead of fighting it, accept the results and consider what the next test should be. What feels intuitive to you might not match your users’ perspective, and A/B testing helps remove that bias.
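Deciding whether a result is a real win or just inconclusive usually comes down to a significance test. One common choice for comparing conversion rates is the two-proportion z-test, sketched below in Python. The visitor and conversion counts are hypothetical, and most A/B testing tools run their own (sometimes different) statistics for you.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) comparing control A with variant B."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

z, p = two_proportion_z_test(conv_a=200, n_a=10_000, conv_b=230, n_b=10_000)
verdict = "significant" if p < 0.05 else "inconclusive"
print(f"z = {z:.2f}, p = {p:.3f} -> {verdict}")
```

With these made-up numbers, the variant converts at 2.3% versus 2.0%, yet the p-value is roughly 0.14, above the conventional 0.05 threshold. That is exactly the kind of inconclusive result worth accepting rather than fighting: at this sample size, the difference may simply be noise.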

Keep testing

You might see a nice lift after one A/B test, but that’s not the time to quit. A/B testing works best when it’s continuous and iterative.

Each round builds on the last, so the more you test, the more reliable your picture of what works for your users becomes.

A/B testing tools

Want to explore A/B testing a bit more? We have a whole article dedicated to usability testing tools.

Begin A/B testing now

Want to maximize the benefits of A/B testing? Think outside the box: A/B test new app features, chatbots, product images, and pricing structures.

And document everything that you test—the information will come in handy next quarter (or next year, etc.) when the market changes, leadership changes, or your products change.

A success in A/B testing doesn’t always mean a conversion increase (although that’s definitely nice and the ultimate goal). Regardless of the test results, A/B testing provides valuable information about improving your site (which eventually helps increase revenue).

If you’re struggling with your conversion rate, abandoned carts, or traffic drop-off and don’t know where to start, you might want to consider the Digital Experience Optimization Program™. We’ll analyze your site, find out why your site isn’t converting, and provide solutions.

About the Author

Jon MacDonald

Jon MacDonald is founder and President of The Good, a digital experience optimization firm that has achieved results for some of the largest companies including Adobe, Nike, Xerox, Verizon, Intel and more. Jon regularly contributes to publications like Entrepreneur and Inc.