MaxDiff Analysis: A Case Study On How to Identify Which Benefits Actually Build Customer Trust

Stop guessing what messages resonate. Let customers tell you what matters most when they're deciding whether to trust your business.

When a SaaS company approached us after noticing friction in their trial-to-paid conversion funnel, they had a specific challenge: their website was generating demo requests, but prospects weren’t converting to customers. User research revealed a trust problem. Potential buyers were saying things like, “I need more proof this will actually work for a company like ours,” and “How do I know this won’t be another failed implementation?”

The company had assembled a list of proof points they could showcase on their homepage: years in business, number of integrations, customer counts, implementation guarantees, security certifications, industry awards, analyst recognition, and more. But they only had space to highlight four of these benefits prominently below their hero section. They faced the classic messaging dilemma: which trust signals would actually move the needle with prospects evaluating B2B software?

This is where MaxDiff analysis becomes valuable. Instead of relying on stakeholder opinions or generic best practices, we could let their target buyers vote with data on what mattered most.

What makes MaxDiff analysis different from other survey methods

MaxDiff analysis (short for Maximum Difference Scaling) is a research methodology that forces trade-offs. Rather than asking people to rate items individually on a scale, MaxDiff presents sets of options and asks participants to identify the most and least important items in each set. This forced-choice format reveals true preferences because people can’t rate everything as “very important.”

Here’s why this matters: traditional rating scales often produce compressed results where everything scores high. When you ask customers, “How important is X on a scale of 1-10?” most people will hover around 7 or 8 for anything remotely relevant. You end up with a spreadsheet full of similar numbers and no clear direction.

MaxDiff cuts through that noise. By repeatedly asking “which of these five options matters most to you, and which matters least?” across different combinations, you build a statistical picture of relative importance. The math behind MaxDiff generates a best-worst score for each item, showing not just which options are preferred, but by how much.

For digital experience optimization, this methodology is particularly useful when you need to prioritize limited real estate on a website, determine which features to build first, or figure out which messaging will actually differentiate your brand.

How we structured the MaxDiff study for maximum insight

In the project for our client, we started by defining the target audience precisely. The company was a B2B SaaS platform serving mid-market operations teams, so we recruited 60 participants who matched their customer profile: director-level or above at companies with 50-500 employees, working in operations or supply chain roles, currently using at least two SaaS tools in their workflow, and having actively evaluated solutions within the past six months.

From the initial audit and stakeholder interviews, we identified 11 potential trust signals the company could emphasize on its homepage. These included things like:

- Concrete numbers (customer counts, uptime percentages, integrations available)

- Credentials (security certifications, enterprise clients)

- Promises (implementation timelines, support response times, money-back guarantees)

- And more

Each represented something the company could truthfully claim, but we needed to know which ones would build the most trust with prospects evaluating the platform.

The survey design was straightforward. Each participant saw these 11 benefits randomized into multiple sets of five items. For each set, they selected the most important factor and the least important factor when considering whether to adopt this type of software. Participants completed several rounds of these comparisons, seeing different combinations each time.
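The set-building step described above can be sketched in a few lines of Python. This is a minimal illustration, not the survey tool used in the study: the function name `build_maxdiff_sets`, the placeholder labels, and the choice of seven sets per participant are all assumptions (the study only specifies 11 benefits shown in sets of five over "several rounds"). The sketch favors the least-shown items on each round so that every benefit gets roughly equal exposure.

```python
import random
from collections import Counter

def build_maxdiff_sets(items, set_size=5, num_sets=7, seed=None):
    """Build one participant's MaxDiff sets with roughly balanced exposure.

    num_sets=7 is an assumed value; the study does not state the exact count.
    """
    rng = random.Random(seed)
    shown = Counter()  # how many times each item has appeared so far
    sets = []
    for _ in range(num_sets):
        # Least-shown items come first; a random tiebreak varies the combinations.
        ranked = sorted(items, key=lambda item: (shown[item], rng.random()))
        chosen = ranked[:set_size]
        rng.shuffle(chosen)  # randomize on-screen position within the set
        shown.update(chosen)
        sets.append(chosen)
    return sets

# Placeholder labels standing in for the 11 trust signals (hypothetical names).
benefits = [f"Benefit {i}" for i in range(1, 12)]

for number, item_set in enumerate(build_maxdiff_sets(benefits, seed=42), start=1):
    print(number, item_set)
```

Dedicated survey platforms typically use more rigorous balanced designs that also control how often pairs of items appear together; the greedy approach here only balances how often each item is shown.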

This approach gave us enough data points to calculate a robust best-worst score for each benefit: the number of times it was selected as “most important” minus the number of times it was selected as “least important.” A positive score means the benefit was chosen as most important more often than least important, a negative score means the reverse, and the magnitude shows the strength of feeling.
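As a rough sketch of that calculation, the counting version of the best-worst score takes only a few lines of Python. The function name `best_worst_scores`, the response format, and the toy responses below are hypothetical and are not data from the study.

```python
from collections import Counter

def best_worst_scores(responses, items):
    """Count-based best-worst score: times picked 'most' minus times picked 'least'.

    responses: iterable of (most_important, least_important) pairs, one per set.
    items: every item in the study, so never-picked items still get a score of 0.
    """
    best = Counter()
    worst = Counter()
    for most_important, least_important in responses:
        best[most_important] += 1
        worst[least_important] += 1
    return {item: best[item] - worst[item] for item in items}

# Toy example with made-up labels and answers (not the study's data).
items = ["Ratings", "Guarantee", "Headcount", "Awards", "Support"]
toy_responses = [
    ("Ratings", "Headcount"),
    ("Ratings", "Awards"),
    ("Guarantee", "Headcount"),
    ("Headcount", "Awards"),
]
scores = best_worst_scores(toy_responses, items)
print(scores)
```

Because every completed set contributes exactly one “most” pick and one “least” pick, scores computed this way always sum to zero across all items, which makes a handy sanity check on the tally.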

The results revealed a clear hierarchy of trust signals

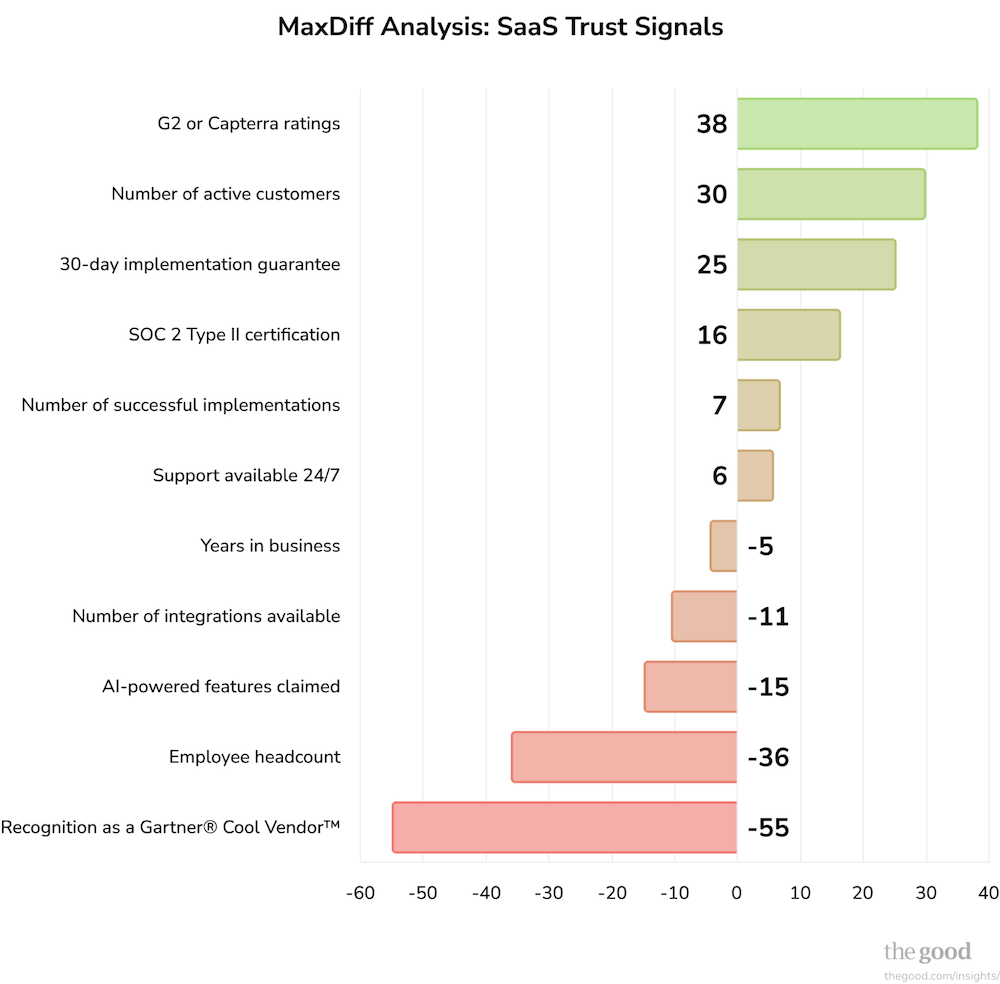

When we analyzed the MaxDiff results, the pattern was striking. The top-scoring benefits shared a common theme: they provided concrete evidence of proven reliability and satisfied users. The bottom-scoring benefits? They emphasized company scale and marketing visibility.

The four highest-scoring trust signals were clear winners. G2 or Capterra ratings scored 38 points, the highest of any item, making them the most consistently valued signal. The number of active customers scored 30 points. An implementation guarantee (“live in 30 days or your money back”) scored 25 points. And SOC 2 Type II certification scored 16 points.

These weren’t arbitrary marketing metrics. They were the specific signals that would make someone think, “this platform delivers real value and other companies trust them.”

The middle tier included operational details that registered as minor positives but weren’t decisive: the number of successful implementations (7 points) and the availability of 24/7 support (6 points). These signals suggested competence but didn’t move the needle much on trust.

Then came the surprises. Years in business scored -5 points, indicating it was selected as “least important” slightly more often than “most important.” The number of integrations available scored -11 points. The AI-powered features claim scored -15 points. Employee headcount scored -36 points. And recognition as a Gartner Cool Vendor scored -55 points, the lowest score of any item.

Think about what prospects were telling us: “I don’t care that you have 200 employees or that Gartner mentioned you. Show me that real companies like mine trust you and that you’ll actually deliver on your promises.”
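For readers who want to work with the numbers, here is a small Python sketch that tabulates the 11 scores reported above, ranks them, and pulls out the four items for the homepage slots. The variable names are illustrative. The scores themselves come straight from the results in this section.

```python
# Best-worst scores as reported in this case study.
reported_scores = {
    "G2 or Capterra ratings": 38,
    "Number of active customers": 30,
    "Implementation guarantee": 25,
    "SOC 2 Type II certification": 16,
    "Number of successful implementations": 7,
    "24/7 support": 6,
    "Years in business": -5,
    "Number of integrations": -11,
    "AI-powered features": -15,
    "Employee headcount": -36,
    "Gartner Cool Vendor recognition": -55,
}

HOMEPAGE_SLOTS = 4  # the company had room for four benefits below the hero

# Rank from most to least trusted signal.
ranked = sorted(reported_scores.items(), key=lambda pair: pair[1], reverse=True)
featured = [name for name, _ in ranked[:HOMEPAGE_SLOTS]]
net_negative = [name for name, score in ranked if score < 0]

for name, score in ranked:
    print(f"{score:+4d}  {name}")

# Sanity check: count-based best-worst scores should sum to zero.
print("Sum of scores:", sum(reported_scores.values()))
print("Featured:", featured)
```

The reported scores do sum to zero, which is what you would expect when every set yields exactly one “most important” and one “least important” selection.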

Why customers rejected company-focused metrics

The findings revealed an insight into trust-building that extends beyond this single company. B2B buyers weigh social proof and reliability guarantees far more heavily than they weigh indicators of company scale or industry recognition.

When a business talks about its employee headcount or analyst mentions, prospects interpret this as the company talking about itself. These metrics answer the question “How big is your business?” but not “Will this solve my problem?” From the buyer’s perspective, a larger team or Gartner mention doesn’t necessarily correlate with better software or smoother implementation.

By contrast, user reviews and customer counts answer the implicit question every prospect has: “Did this work for companies like mine?” A guarantee directly addresses risk: “What happens if implementation fails?” Security certifications address legitimacy: “Is this platform secure enough for our data?”

The AI-powered features claim scored poorly, likely because it felt trendy rather than practical. Prospects for this specific business weren’t primarily concerned about cutting-edge technology; they wanted a platform that would reliably solve their workflow problems. Leading with an AI angle, while possibly true, didn’t address the core decision-making criteria.

Years in business scored negatively for similar reasons. While longevity can signal stability, in this context, it didn’t address the prospect’s immediate concerns about implementation speed and user adoption. A company could be around for years while providing clunky software with poor support.

From insight to implementation: turning research into revenue

The MaxDiff analysis gave the company a clear action plan. We recommended implementing a four-part trust signal section directly below their homepage hero, featuring the top four scoring benefits in order of importance.

This meant reworking their existing homepage structure. Previously, they had emphasized their implementation guarantee in the hero area while burying customer counts and ratings further down the page. The research showed this approach had it backward. Prospects needed to see evidence of customer satisfaction first, then the implementation guarantee as additional reassurance.

We also recommended removing or de-emphasizing several elements they had been proud of. The employee headcount mention, the Gartner recognition, and several other low-scoring items were either removed entirely or moved to less prominent positions on the site. The goal was to prevent low-value signals from crowding out high-value ones.

The broader lesson here extends beyond this single homepage optimization. The MaxDiff results provided a messaging hierarchy that the company could apply across its entire go-to-market strategy. Email campaigns, landing pages, sales conversations, demo decks, and even their LinkedIn company page could now emphasize the trust signals that actually mattered to prospects.

When MaxDiff analysis makes sense for your business

MaxDiff is particularly valuable when you’re facing a prioritization problem with limited data. It works best in these scenarios:

- You have more options than you can implement. Whether that’s features to build, benefits to highlight, or messages to test, MaxDiff helps you choose wisely when you can’t do everything at once.

- Stakeholder opinions are conflicting. When internal debates about priorities can’t be resolved through argument, customer data settles the question. MaxDiff provides quantitative evidence for decision-making.

- You need to differentiate in a crowded market. If competitors are all saying similar things, MaxDiff reveals which specific claims will break through. Often, the winning messages are ones companies overlook because they seem “obvious” or “not unique enough.”

- You’re optimizing for a specific audience segment. Generic research about “customers in general” often produces generic insights. MaxDiff works best when you recruit participants who precisely match your target customer profile.

The methodology has limitations worth noting. It requires careful setup, and you need to know which options to test before you start.

If you don’t include the right benefits in your initial list, you won’t discover them through MaxDiff.

It also works best with a reasonably sized set of options (typically 5-15 items).

And the results tell you about relative importance, not absolute importance; everything could theoretically matter, but MaxDiff reveals the hierarchy.

How to use MaxDiff findings in your optimization strategy

Once you have MaxDiff results, the application extends beyond simply reordering homepage elements. The insights should inform your entire digital experience.

Your messaging architecture should reflect the importance hierarchy. High-scoring benefits deserve prominent placement, repetition across pages, and detailed explanation. Low-scoring benefits can either be removed or repositioned as supporting rather than leading messages.

Your testing roadmap should prioritize changes based on MaxDiff findings. If customer reviews scored highest in your study, test different ways of showcasing reviews before you test other elements. Let the data guide your experimentation priorities.

Your content strategy should emphasize what customers care about. If service guarantees scored highly, create content that explains the guarantee in detail, shares stories of when it was honored, and addresses common concerns. Build your editorial calendar around the topics MaxDiff revealed as important.

Your sales enablement should align with customer priorities. If the research showed that prospects value licensing credentials, make sure your sales team knows to emphasize this early in conversations. Create collateral that highlights the trust signals that matter most.

The most effective companies use MaxDiff as one tool in a broader research program. They combine it with qualitative research to understand why certain benefits matter, behavioral analytics to see how users interact with different messages, and continuous testing to validate that the predicted preferences translate into actual conversion improvements.

Turning guesswork into growth

The SaaS company we worked with started with 11 possible messages and no clear sense of which would build trust most effectively with B2B buyers. After the MaxDiff analysis, they had a data-backed hierarchy that let them confidently restructure their homepage and broader messaging strategy.

This is the power of asking prospects the right questions in the right way. Not “do you like this?” which produces inflated scores for everything. Not “rank these 11 items,” which overwhelms participants and produces unreliable data. Instead, repeated forced choices reveal the true relative importance of each element.

If you’re struggling with similar prioritization challenges (too many options, limited space, stakeholder disagreement about what matters), MaxDiff analysis might be the tool that breaks through the noise. It transforms subjective opinion into statistical evidence, letting your prospects vote on what will actually convince them to choose your platform.

Ready to discover which messages actually resonate with your customers? The Good’s Digital Experience Optimization Program™ includes research methodologies like MaxDiff analysis to help you prioritize changes based on real customer preferences, not guesswork.

About the Author

Sumita Paulson

Sumita is a Strategist at The Good with a decade of experience as a front-end developer. She works to create meaningful digital experiences and solve the everyday problems that make up our interactions with technology.