How to Conduct High-Impact User Testing: Part 2 – Formulating Tasks and Questions

User testing is the best way to know how people are interacting with your website or product. This Insight takes a closer look at how to develop tasks and questions that will supercharge your testing program.

This is part 2 of a 3-part series titled How to Conduct High Impact User Testing. To start from the beginning, read Part 1: Thoughtful Panel Selection.

Running frequent, iterative, unmoderated user testing can help you uncover pain points and inform your design decisions before committing resources and development time. However, if we’ve learned one thing about user testing, it’s that the user test is only as good as the questions you ask.

Regardless of what testing tools you utilize, it’s the thoughtful responses you get from your user testers that make your investment worthwhile. If you’re finding that the results of your user testing endeavors are not productive, it’s possible that you’re just not asking the right questions. This Insight is focused on how to surface useful and meaningful feedback from user testers.

In this article we’ll cover:

- How to avoid biasing your audience

- Why it’s important to set learning goals

- The importance of a pilot launch

- How to avoid staging unnatural behavior

- The suitable number of tests for your audience

Don’t prime or bias your audience

The first questions we almost always ask when conducting a user test are, “What do you think this website offers? What would you come here for?” Giving users a concrete idea of what the site sells before they have a chance to tell you what they think can mean losing key insights. We’ve found that asking questions like these can surface unexpected results.

ECOS Paints assumed that education was critical to helping users understand why they needed to choose eco-friendly paint, but through user testing we found that the emphasis on education gave the impression that this was a manufacturer’s website. As a result, users typically spent 5-10 minutes on the site before realizing they could buy products there.

Aim to put users in the right mindset without priming them to know exactly what the site offers. So instead of saying, “Imagine you are shopping for environmentally friendly house paint,” we would recommend a prompt like, “Imagine you have an upcoming home renovation.” The latter gives the context needed to prompt a thoughtful response, but doesn’t give away the purpose of the site.

Decide ahead of time what questions you are looking to answer

What tasks are you hoping to see users accomplish? Do you want to know how and why they use the menu, where they have difficulty finding a product, or why they may be dropping out during the checkout process? Outlining key pain points that you may have already discovered in your data will help guide you to create tasks that reveal why users behave the way they do on your site.

Do they find the user interface of your site to be intuitive, or frustrating? Asking users to rate their experience as they perform tasks on your site may reveal not only why users choose to purchase from you once, but also why they may return to your site.

Do they find that you are better than or comparable to your competitors? Utilizing competitive user testing – asking users to explore your site alongside one or more of your competitors – can reveal where your strengths and weaknesses are as well as why your target audience may prefer to shop with your competitors.

Running competitive testing will help you compare how your audience perceives the competition against your own site, and the data you draw from this will help you identify what’s great about your site, and what needs to be optimized.

Don’t risk staging unnatural behaviors

The number one mistake that we see new researchers and strategists make is thinking too narrowly about user tests. You may want to know how users react to one particular page on your site, or to test your assumptions, but we often get fresher, more impactful results when we aim for natural behaviors that aren’t influenced by the structure of the test.

Over-structure the tasks in your user test and you risk missing key behavior patterns that are stopping real users in their tracks and causing funnel abandonment.

In our experience, the first few minutes a user spends on the site are the closest to “natural” we will get in the session, so we leave those first few minutes relatively unstructured. For this reason, early in a test we often ask users to “Explore this website in whatever area interests you. Spend no more than a few minutes on this task.” This produces close-to-natural behavior that will show you what’s grabbing user attention and how they would naturally behave on the site. The rest of the tasks should be formulated to help users navigate down the conversion funnel with just enough guidance to stay on-task and deliver pointed insight.

Under-structuring tasks can also lead to unnatural behaviors and over-emphasize the impact of page elements.

User testers who find something annoying can end up dwelling on a single trivial detail for the majority of the test, which is rarely useful. One way to get natural behavior with more pointed tasks is to give a time limit. Since real users are unlikely to browse a site for 30 minutes, giving an estimate of how long users should spend on each task will help ensure the feedback is useful and not overly specific or critical. Simply adding “Spend no more than a few minutes on this task” to your prompts should do the trick.

Always launch a pilot test

If you’ve done everything right up until this point, then there shouldn’t be much to correct, but there’s always the chance that one of your links didn’t work or that a task just doesn’t make sense to user testers. Launching a single “pilot” test will ensure you have all your ducks in a row by uncovering any questions that may be unclear to your testers, as well as places where you can ask them for more feedback. Having this opportunity to edit and refine the test will ensure that you’re set up for success with the rest of your user testing sessions.

Don’t waste resources running too many tests

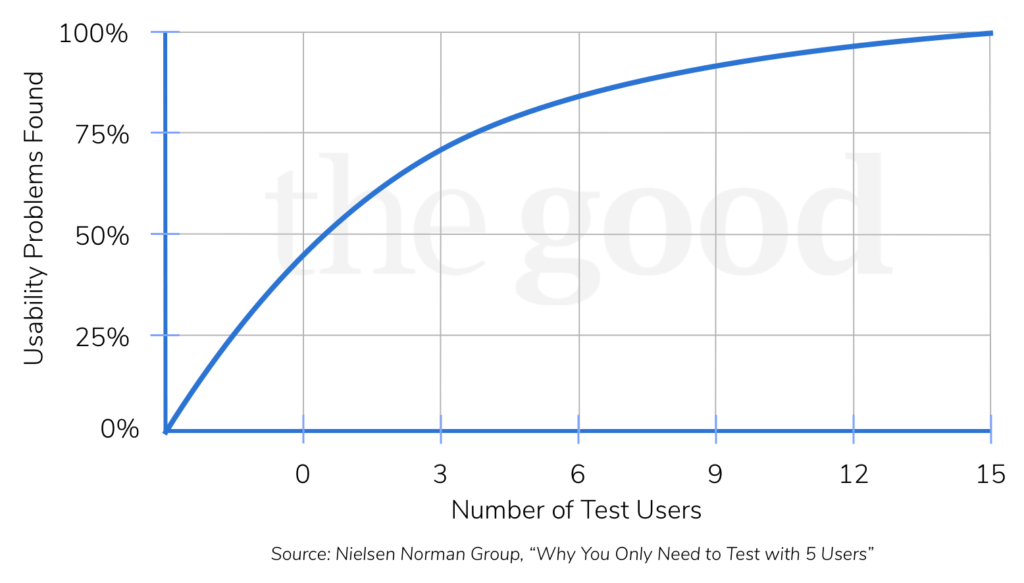

It can be daunting to launch a batch of tests and look at the sheer number of minutes left to annotate and analyze. But we assure you, most people are launching too many tests. Industry colleagues at Nielsen Norman Group have found that testing with roughly 5 users uncovers about 85% of usability issues, and that additional tests beyond that point have diminishing returns. (We’ve found that at just 3 users, we start to see patterns between sessions.) By sticking to 5 tests to start, we avoid over-emphasizing small problems and instead uncover more overarching issues: reasons users abandon your site and how they can become brand loyalists.
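To make the diminishing returns concrete, here is a minimal sketch of the problem-discovery model commonly attributed to Nielsen and Landauer, in which the share of issues found by n testers is 1 - (1 - L)^n. The per-tester discovery rate L = 0.31 is the average usually quoted alongside that model; it is an assumption here, not a figure from our own tests, and the function name is purely illustrative.

```python
def share_of_issues_found(num_users: int, per_user_rate: float = 0.31) -> float:
    """Estimate the fraction of usability issues found by `num_users` testers.

    Uses the model 1 - (1 - L)^n, where L is the chance that a single
    tester runs into any given issue. The default L = 0.31 is an assumed
    average; your own site and audience may differ.
    """
    if num_users < 0:
        raise ValueError("num_users must be zero or greater")
    if not 0.0 <= per_user_rate <= 1.0:
        raise ValueError("per_user_rate must be between 0 and 1")
    return 1.0 - (1.0 - per_user_rate) ** num_users


if __name__ == "__main__":
    # Print the estimated coverage for a few panel sizes.
    for n in (1, 3, 5, 10):
        print(f"{n:>2} users: {share_of_issues_found(n):.0%} of issues")
```

Under these assumptions, 3 testers surface roughly two-thirds of the issues and 5 testers roughly 84%, in the same ballpark as the figure quoted above. Doubling the panel from 5 to 10 adds comparatively little, which is the argument for several small rounds over one large one.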

If 5 sounds like too few tests, try embracing smaller sample sizes, which we believe foster a culture of experimentation and validation. Start by running a handful of tests early on to uncover major usability issues, followed by small iterative testing cycles to refine your site experience. This approach will give you more focused findings and ensure that you’re not wasting resources on futile tests.

The art of formulating user testing questions is one that can be refined and continually improved upon

Even trained experts go back to the basics every time. Learning to remove bias, set learning goals, and avoid staging unnatural behavior will ensure that each testing series is high impact. Launching a pilot test and moving in small, iterative testing cycles mean you’re never risking precious resources on a potentially faulty test.

Once your tests are compiled and your panels are filled, then comes the fun part: analyzing all the hours of testing you’ve collected. Analysis is the final critical part of your user testing program, and it is usually what pays back the time and resources you’ve invested in planning and executing the tests.

Read Next: How to Conduct High Impact User Testing Part 3: Analyzing the Results

About the authors:

Natalie Thomas is the Director of CRO & UX Strategy at The Good. She works alongside ecommerce and lead generation brands every day to produce sustainable, long-term growth strategies.

Maggie Paveza is a CRO Strategist at The Good. She has over five years of experience in UX research and Human-Computer Interaction, and acts as the team’s expert in user research.