What Others Fail to Teach About A/B and Multivariate Testing

An introductory look at conversion optimization testing methods: Split, A/B, and Multivariate

Do you believe everything you read online?

No?

Then why do you keep getting distracted by those messages promising a revolutionary, sure-fire way to pole-vault your conversion rate to the top of your industry?

Don’t worry. You’re not alone. Flavor-of-the-week conversion advice gets more websites stuck in the digital mud than any other distraction we know of – including social media and mirror image bias (thinking the customer looks just like you).

Let’s take a look at a straight-line path to accomplishing your goals – something scientifically proven and confirmed by the most successful companies in the world to be absolutely trustworthy.

After all, the opinions we care about most come from the customer, and the results we care about most are always aimed at well-defined goals. Let the sellers of new ideas keep repackaging and pushing their wares.

We’ll stick with the narrow way. Nothing complicated. Nothing theoretical. Just clear-cut paths to carefully crafted outcomes.

Period.

Let’s take a detailed look at conversion optimization testing methodology: Split, A/B, and Multivariate.

A/B and Multivariate Testing – Defining Terms

First, let’s get at the differences between the primary methods of conversion optimization testing. Then we’ll talk about when and why you should use one or the other.

A/B testing

You have a decision to make. Maybe your current call to action (CTA) copy for the buy button is “Buy Now”, but your conversion rate isn’t where you want it to be, so you wonder whether changing the copy to “Place My Order” would make a difference.

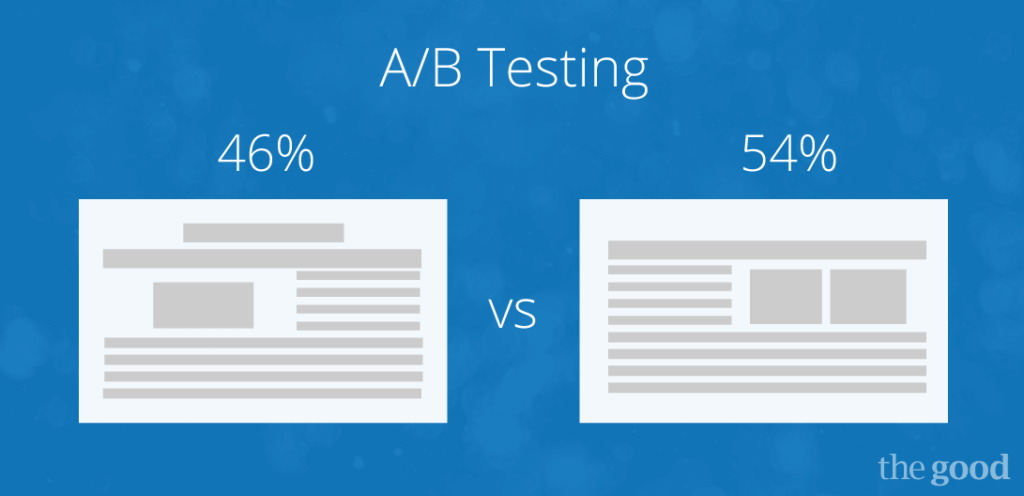

You set up an A/B test to send half your traffic to the Buy Now version and the other half to the Place My Order version. You wait long enough to get a good sample, compare the results, and choose the winner.
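The 50/50 traffic split described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular testing tool works: the experiment name `cta-copy`, the `assign_variant` helper, and the visitor ID format are all assumptions made for the example. Hashing the visitor ID means a returning visitor always sees the same button copy.

```python
import hashlib

# The two CTA labels from the example above.
VARIANTS = ["Buy Now", "Place My Order"]


def assign_variant(visitor_id: str, experiment: str = "cta-copy") -> str:
    """Deterministically map a visitor to one variant (roughly 50/50).

    The experiment name is mixed into the hash so that different tests
    do not always bucket the same visitors together.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(VARIANTS)
    return VARIANTS[bucket]


def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who converted; 0.0 when there were no visitors."""
    return conversions / visitors if visitors else 0.0
```

Calling `assign_variant("visitor-123")` repeatedly returns the same label each time, which keeps the experience consistent for each visitor while the overall traffic divides about evenly.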

That’s the way many, maybe most, marketers use A/B testing.

It’s wrong.

It’s not the most efficient use of your time, nor will it deliver the best results.

A better project for A/B testing would test two radically different site designs. A/B testing can help you quickly narrow down the candidates from a global perspective.

Moreover, A/B testing isn’t limited to two variations. You could put forth five (let’s say) different website layouts or design perspectives and test them all against one another. That’s still A/B testing, since you’re testing one thing (an entire page) against gross revisions of the page.

Key point: Use A/B testing for global changes, not for small changes.

Multivariate testing

Testing takes time and costs money. The sooner you find better solutions to your current problems, the sooner you can begin benefiting from those solutions.

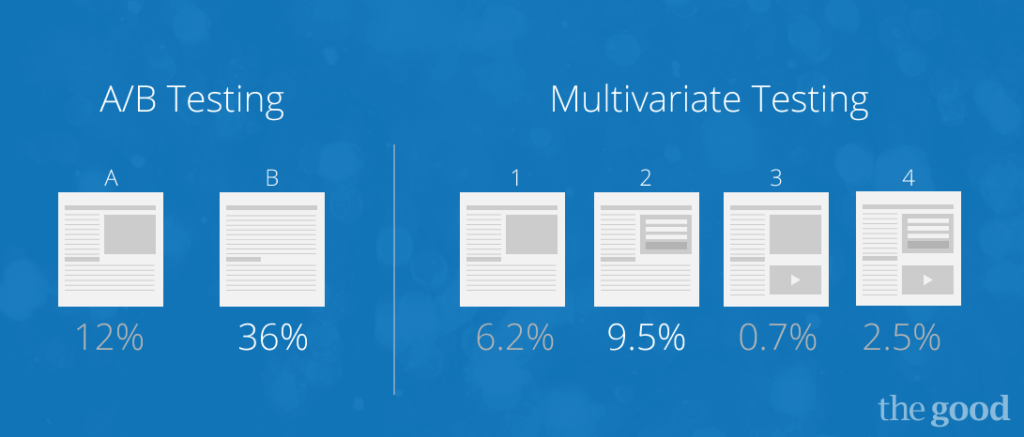

Once A/B testing has determined the overall layout that works best to help move your visitors further down the sales path you’ve established, you’ll want to switch to multivariate testing (MVT) to get your conversion rate spiking as quickly as possible.

Rather than testing only one change at a time, with multivariate testing you’ll throw several variables into the mix and test them all at once. That’s why MVT provides a time-to-solution advantage. It can quickly help you determine the best combination of potential changes.

You may want to change the color of the button and the size of the button, in addition to the CTA copy on the button. With two options for each, that will give you eight variations (2 x 2 x 2) to test. Because your traffic is now divided among eight variations instead of two, you’ll need to run the MVT longer than the A/B test, but the results will give you considerably more insight.
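To see where the eight variations come from, here is a short Python sketch that lists every combination. The specific colors and sizes are placeholders invented for the example; only the two CTA labels come from the article.

```python
from itertools import product

# Each element under test and its two options.
# The colors and sizes are hypothetical placeholders.
factors = {
    "color": ["green", "orange"],
    "size": ["small", "large"],
    "copy": ["Buy Now", "Place My Order"],
}


def build_variations(factors: dict) -> list:
    """Return one dict per combination of options (a full factorial design)."""
    names = list(factors)
    return [dict(zip(names, combo)) for combo in product(*factors.values())]


variations = build_variations(factors)
print(len(variations))  # 2 x 2 x 2 = 8
for variation in variations:
    print(variation)
```

Adding a third option to any one element raises the count to 12 (3 x 2 x 2), which is why the number of elements and options in an MVT is worth keeping small.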

Once again, you should use A/B testing to compare one page against another. You should use multivariate testing to compare changes to multiple elements on a certain page.

Key point: A/B testing takes care of selecting the big picture. MVT refines the picture you choose.

Split testing isn’t what it’s often made out to be

Promise us you won’t use this information to start arguments at the water cooler. This is for those who like to be exact. It won’t matter to anyone else. If you introduce the concept to your team at all… be kind, and expect to get challenged.

You’ll often hear A/B and multivariate testing referred to as “split testing.” You’ll read it in articles, hear it from the podium, even see it in white papers.

But split testing is neither A/B nor multivariate. Split testing, in its pure form, is the process of using a different URL for different versions of the test. That’s it.

It’s really “Split URL Testing.”

Split testing can be used in either form of conversion optimization testing, but split testing isn’t either form. Of course, that’s not the prevalent current usage and words do change meaning over time… so few people care.

No argument. But don’t let the potential benefit of split URL testing disappear along with the strictness of the meaning. Along with the technical reasons for using different URLs for each version of your page, there’s another component often overlooked: the URL can affect click-through rate.

For instance, would someone be more likely to click on “yourcompany.com/buynow” or “yourcompany.com/yes”? Would it make a difference at all? You’ll never know until you test. You can use split URL testing to compare URLs.
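As a rough illustration of comparing two URLs, the Python sketch below tallies click-through rate per URL from a log of impressions. The log entries are invented sample data and the `click_through_rates` helper is a hypothetical name; a real test would pull these numbers from your analytics or ad platform.

```python
from collections import Counter

# Invented sample data: (URL shown, whether it was clicked).
impressions = [
    ("yourcompany.com/buynow", True),
    ("yourcompany.com/buynow", False),
    ("yourcompany.com/yes", False),
    ("yourcompany.com/yes", True),
    ("yourcompany.com/yes", True),
    ("yourcompany.com/buynow", False),
]


def click_through_rates(records) -> dict:
    """Return clicks divided by impressions for each URL in the log."""
    shown = Counter()
    clicked = Counter()
    for url, was_clicked in records:
        shown[url] += 1
        if was_clicked:
            clicked[url] += 1
    return {url: clicked[url] / shown[url] for url in shown}


rates = click_through_rates(impressions)
for url, rate in rates.items():
    print(f"{url}: {rate:.0%}")
```

Six impressions are far too few to draw a conclusion from; the sample only shows the bookkeeping.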

Tools for A/B and Multivariate Tests – Which are Best?

If you plan to run conversion optimization tests, you’ll need special tools and know-how. Even simple A/B tests can get complicated without proper setup and planning.

Here’s a list of options to help get you started:

Google Analytics: It’s the world’s most popular website analytics tool, and it now includes a testing feature, “Content Experiments.” It’s a mashup of the prior tool, “Website Optimizer” and Google Analytics. If you’re a prior user of Google Website Optimizer, be sure to remove those tags before switching over to Content Experiments.

There’s plenty of power available in the Google tool chest. The primary downside is a tendency towards confusion: frequent changes, hard-to-get direct support, and steep learning curves.

Visual Website Optimizer: VWO is an easy-to-use testing tool that lets marketers create A/B tests with a point-and-click editor. It also offers MVT, heatmaps, usability testing, and more. Plans start at $49/month, which makes it a good fit for small and medium-sized businesses. You can also try it out with the 30-day free trial.

Optimizely: Probably the most familiar non-Google third-party option for testing, Optimizely is simple to use and comes with easier access to support. Optimizely also provides its own training academy for users. The platform is targeted at enterprise clients, so it may be expensive for smaller organizations, but it does offer a 30-day free trial or pay-as-you-go plan for tire kickers.

Should you try to go it on your own, or should you outsource your testing? The best answer to that question depends primarily on your in-house resources. That said, there can also be value in getting a fresh set of eyes (outside your business) looking at your website.

Setting Up Your Conversion Optimization Testing – Do’s and Don’ts

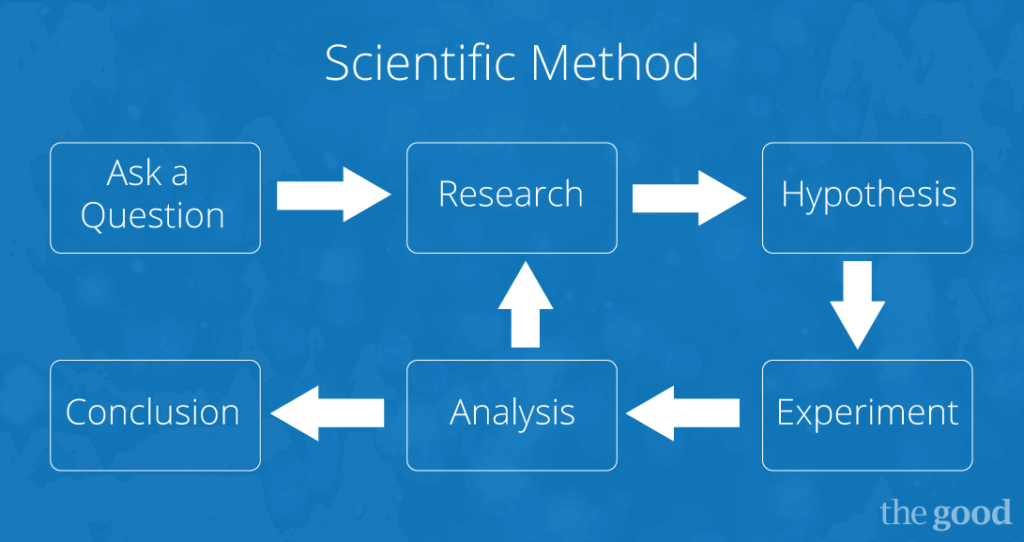

At The Good, we approach conversion optimization testing scientifically. We rely on the data to guide us, rather than trying to guide the data.

The steps of the scientific method are logical, simple, and well-known. In layman’s terms, here are the basics of how you can conduct testing on your ecommerce or lead-generation website:

- Identify a problem you wish to address – something you want to understand

- Use your current knowledge to propose a solution or explanation

- Set up an experiment to test your hypothesis (your best guess)

- Run the test and analyze the results

- Learn from the test, and keep testing until you’re satisfied with the results

Follow that path, and you will learn. Deviate, and you can head down the wrong trail. That will waste time, cost money, and may even lead to disaster.

Here are some of the most pertinent do’s and don’ts for testing:

Identifying the problem: For an ecommerce or lead-generation site, the problem is almost always centered on conversion rate. Pay attention to what your visitors do, more than what they say. It’s entirely possible to make changes based on customer feedback and see your conversion rate drop. Always test for results before making your decisions. And always put your prime testing time and finances into changes that potentially have the most effect on your bottom line. Major in the majors.

Proposing a solution: Above all, listen to your customers. The changes you make should focus on what works best for them. Period. It’s almost always better to make things simpler than to make things more complicated. Make the action you want visitors to take absolutely clear.

Setting up the experiment: Refer back to the earlier information about the difference between A/B testing and multivariate testing. If you’re looking at global changes – one page layout versus another, for instance – A/B testing will probably be the way to go. If you’re trying to tweak for optimization, MVT will probably serve you better. Make sure the only changes to the page you’re testing are the elements included in your test. Be deliberate and pay attention.

Running the test: Always make sure you’ve collected enough data to make your results “statistically significant.” Otherwise, you could base your decisions on chance, not on fact. A statistically significant result is one that is unlikely to be explained by random variation alone. The mathematics of statistics can be daunting, but you can determine the necessary sample size by using A/B test and MVT test calculators (see the resources listed below).
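For a sense of what those calculators do, here is a rough Python sketch using only the standard library. It applies a common normal-approximation formula for comparing two conversion rates. The 5% significance level and 80% power are conventional defaults assumed for the example, and the function names are hypothetical. Individual calculators may use slightly different formulas, so treat the output as a ballpark figure.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, expected_rate: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH variant to detect the difference."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (baseline_rate + expected_rate) / 2
    spread_null = math.sqrt(2 * p_bar * (1 - p_bar))
    spread_alt = math.sqrt(baseline_rate * (1 - baseline_rate)
                           + expected_rate * (1 - expected_rate))
    numerator = (z_alpha * spread_null + z_beta * spread_alt) ** 2
    return math.ceil(numerator / (expected_rate - baseline_rate) ** 2)


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (conv_b / n_b - conv_a / n_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Example: a 2% baseline conversion rate, hoping to detect a lift to 3%.
print(sample_size_per_variant(0.02, 0.03))
# Example: 200 of 10,000 visitors converted on A, 300 of 10,000 on B.
print(two_proportion_p_value(200, 10_000, 300, 10_000))
```

A p-value below the chosen significance level (0.05 here) is the usual threshold for calling a result statistically significant. Note that the smaller the lift you want to detect, the more visitors each variant needs.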

Resources:

Learning from the results: Take it from Nobel-winning physicist Richard Feynman: If the result you expected to see (your proposed solution) disagrees with the result of the experiment, your hypothesis was wrong. End of story. You can keep trying to prove yourself right as long as you wish, but the best strategy is usually to accept the results of the experiment and move on. Beware of executive tendencies to ignore the test results. It takes a certain degree of humility to admit your private opinion doesn’t pan out with reality. Many people find it difficult to yield personal will to scientific testing – no matter how clear the results.

Now Learn From Your A/B and Multivariate Tests

So when do A/B and MVT experiments fail to work?

Only when you fail to set the tests up properly or refuse to learn from the data they provide. Today’s digital marketing managers are bombarded with information daily. It’s easy to push it all off your plate and go back to intuitive decision-making.

The only thing wrong with past experience is that it doesn’t always accurately describe the future. Things change. And in the world of online marketing, they can change rapidly. Once you’ve set up a reasonable testing calendar and protocol, you’ll get more comfortable with drawing on test results to help inform your decisions.

If you need help getting going or simply want to talk about your plans with a team that tests daily… contact The Good.

We love this stuff!

About the Author

Jon MacDonald

Jon MacDonald is founder and President of The Good, a digital experience optimization firm that has achieved results for some of the largest companies including Adobe, Nike, Xerox, Verizon, Intel and more. Jon regularly contributes to publications like Entrepreneur and Inc.