The Exact Framework We Used To Build Intent-Based User Clusters That Drive Retention For A Leading SaaS Company

When you understand what users are trying to accomplish, you can personalize experiences that keep them engaged longer and turn them into power users.

Most SaaS companies segment users the wrong way. They group people by demographics, company size, or subscription tier. Basically, they look at who users are rather than what they’re trying to do.

The problem is that a freelance consultant and an enterprise project manager might both use your collaboration tool, but they have completely different goals, workflows, and definitions of success.

We recently worked with a leading enterprise SaaS company facing exactly this challenge. Their product served everyone from solo creators to enterprise teams, but the experience treated everyone the same.

Users logging in to track a single client project got bombarded with team collaboration features. Power users managing complex workflows couldn’t find advanced capabilities buried in generic menus.

Users felt overwhelmed by irrelevant features, engagement plateaued, and retention suffered.

The solution wasn’t another traditional segmentation model. It was intent-based segmentation: a framework for grouping users by what they’re actually trying to accomplish, then personalizing the experience to match those specific goals.

Understanding behavioral segmentation and where intent fits

Before diving into how to build intent-based clusters, it helps to understand where this approach fits within the broader landscape of user segmentation.

Most SaaS companies are familiar with demographic segmentation (company size, industry, role) and firmographic segmentation (ARR, team size, geographic location). These approaches tell you who your users are, but they don’t tell you what they’re trying to do, which is why they often fail to predict engagement or retention.

Behavioral segmentation takes a different approach. Instead of looking at static attributes, it focuses on how users actually interact with your product.

Behavioral segmentation divides users based on engagement. This includes signals like feature usage patterns, open frequency, purchase behavior, and time-to-value metrics.

While not the only way to do things, behavioral segmentation is widely regarded as more effective than demographic segmentation alone, with plenty of research backing it up.

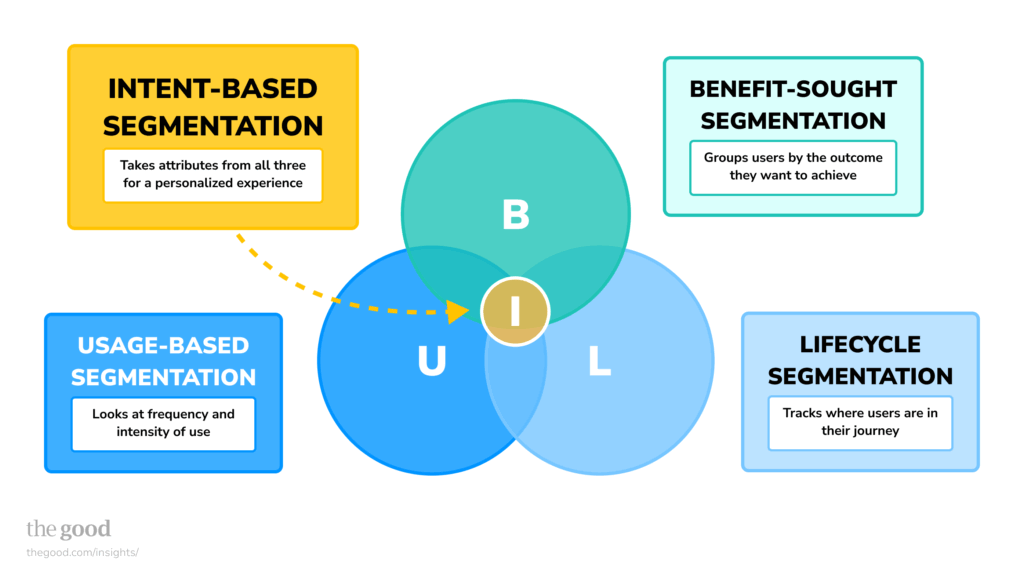

Within behavioral segmentation, there are several approaches:

- Usage-based segmentation looks at frequency and intensity of use.

- Lifecycle segmentation tracks where users are in their journey.

- Benefit-sought segmentation groups users by the outcomes they want to achieve.

Intent-based segmentation sits at the intersection of all three.

It identifies clusters of users who share similar goals and workflows, then maps those patterns to create a more personalized experience.

Intent-based clusters answer the question: “What is this user trying to accomplish right now?”

In a recent client engagement that inspired this article, this distinction mattered. They had mountains of usage data showing which features people clicked, but no framework for understanding why certain feature combinations existed or what job users were trying to complete.

They knew “Business Professionals” used their tool, but that category was so broad it offered no actionable insights. A marketing manager building campaign timelines has completely different needs than a legal team tracking contract approvals, even though both might be classified as “business professionals.”

Intent-based clustering gave them that missing layer of insight.

Case study: How to spot the need for intent-based segmentation

Let’s talk more about the client engagement I mentioned. This is a great case study for when to use intent-based segmentation.

We work with this client on a quarterly retainer through our on-demand growth research services. So, when they mentioned struggling with how to personalize experiences and improve retention, we opened up a research project that same day.

The team could see that certain users logged in daily and used five or more features. Great, right? Not really. When we dug deeper, we discovered something critical. Heavy feature usage didn’t predict retention. Some power users churned while casual users stuck around for years.

The issue wasn’t the quantity of features used; it was whether those features aligned with what users were trying to accomplish. A user coming in weekly to update a single client dashboard showed higher retention than someone exploring ten features that didn’t match their core workflow.

The symptoms: What teams told us was broken

During our stakeholder interviews, we heard the same frustrations across departments:

From product: “We know the top five features everyone uses, but that doesn’t help us understand why they’re using them or what to build next. Two users might both use our template feature, but one is building client proposals while the other is standardizing internal processes. They need completely different things from that feature.”

From marketing: “Our segments are too broad to be useful. ‘Business Professional’ could mean anyone from a solo consultant to an enterprise VP. When we send educational content, we can’t make it relevant because we don’t know what problem they’re trying to solve.”

From customer success: “We can see when someone is at risk of churning because their usage drops off, but we can’t predict it before it happens. By the time we notice, they’ve already decided the product isn’t right for them. We need to understand intent earlier so we can intervene proactively.”

From UX research: “Users think in terms of tasks, not features. They don’t say ‘I want to use the dependency mapping tool.’ They say, ‘I need to make sure the design team finishes before development starts.’ But our product talks about features, not outcomes.”

The underlying problem: Missing the ‘why’

What became clear was that the organization had plenty of data about behavior but no framework for understanding intent. They could answer questions like:

- How many people use feature X?

- What’s the average session duration?

- Which users log in most frequently?

But they couldn’t answer the questions that actually mattered:

- What are users trying to accomplish when they use feature X?

- Why do some users stick around while others churn?

- What combination of goals and workflows predicts long-term retention?

This may sound familiar. Many teams have data about behavior but lack context about intent. Without understanding users’ different definitions of success, they fall back on generic personalization that recommends “similar features” and misses the mark entirely.

The four-phase framework for creating intent-based user clusters

Based on our work with the enterprise SaaS client, we developed a systematic framework for building intent-based clusters from scratch.

The process has four distinct phases, each building on the previous one.

Think of this as a directional guide rather than a rigid formula. You can adapt the scope based on your resources and organizational complexity.

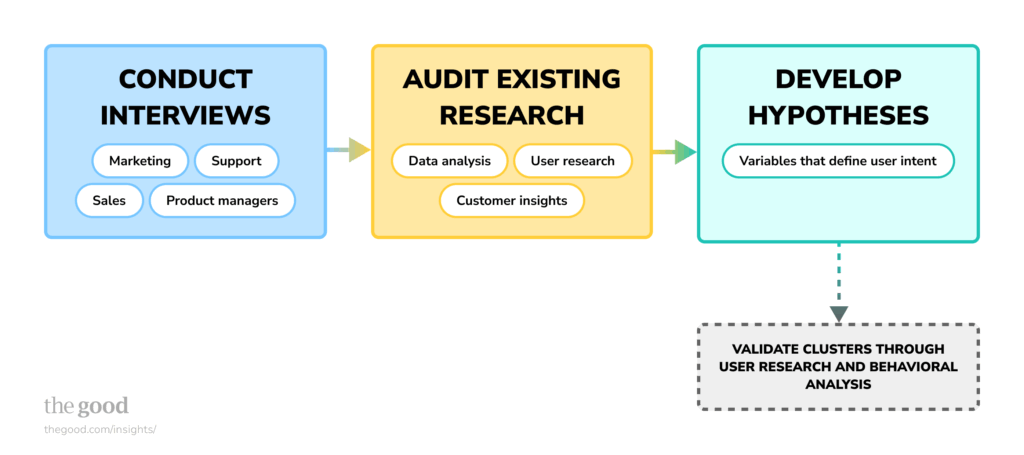

Phase 1: Capture institutional knowledge and identify gaps

Most organizations have more customer insight than they realize; it’s just scattered across teams, buried in old reports, and locked in individual team members’ heads. The first phase consolidates that knowledge and identifies what’s missing.

1. Conduct cross-functional interviews

Start by interviewing stakeholders who interact with users regularly: product managers, customer success, sales, support, and marketing. For our client, we conducted interviews with seven team members from UX research, growth, engagement, marketing, and analytics.

The goal isn’t consensus. You want to uncover patterns and contradictions instead. Focus your conversations on these questions:

- How do you currently describe different user types?

- What patterns have you noticed in how different users engage with the product?

- What data exists about user behavior that isn’t being used to inform decisions?

- Where do personalization efforts break down today?

- What questions about users keep you up at night?

These conversations surface institutional knowledge that never makes it into documentation.

Capture everything. The contradictions are especially valuable—they reveal where teams operate with different mental models of the same users.

2. Audit existing research and reports

Next, gather relevant user research, data analysis, and customer insight. For our client, we analyzed eight existing reports, including retention data, cancellation surveys, user studies, engagement patterns, and conversion analysis.

Look for:

- Marketing segmentation models (usually demographic-heavy)

- User research studies (often small sample, rich insights)

- Behavioral analytics reports (feature usage patterns, cohort analysis)

- Customer journey maps (theoretical vs. actual paths)

- Support ticket analysis (pain points and use cases)

- Cancellation surveys (why users leave)

Pay attention to gaps between how different teams think about users. Marketing might segment by company size, while product segments by feature usage. Neither is wrong, but the lack of a unified framework means teams optimize for different definitions of success.

3. Define hypotheses about intent-based variables

Based on interviews and research, develop hypotheses about what variables might define user intent. This is where you move from “who uses our product” to “what are they trying to accomplish.”

For our client, we identified several dimensions that seemed to correlate with intent:

- Primary workflow type: Are users managing team projects, client deliverables, or personal tasks?

- Collaboration patterns: Solo work, small team coordination, or cross-functional orchestration?

- Usage frequency: Daily operational tool or periodic project management?

- Success metrics: Speed (quick task completion) vs. thoroughness (detailed planning and tracking)?

- Document complexity: Simple task lists or multi-layered project hierarchies?

The goal is to create testable hypotheses that can be validated in Phase 2.

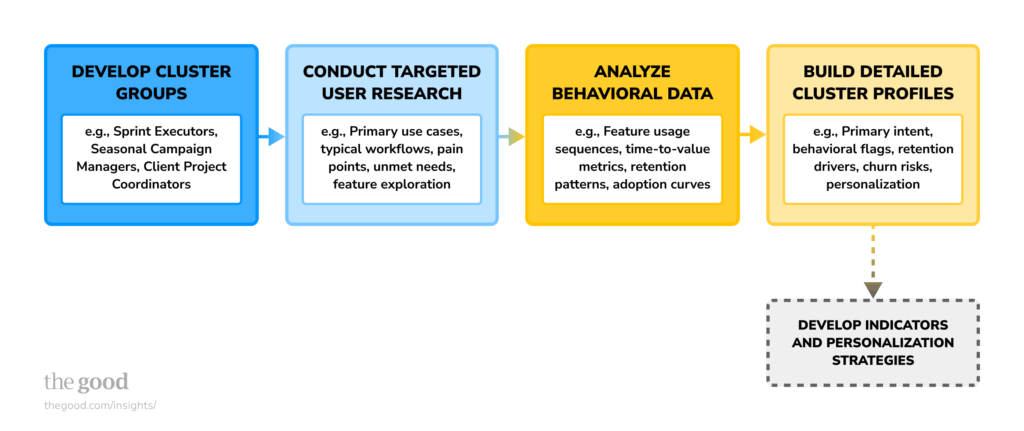

Phase 2: Validate clusters through user research and behavioral analysis

Once you have initial hypotheses, Phase 2 tests them against real user behavior and feedback. This is where hunches become data-backed insights.

1. Develop provisional cluster groups

Transform your hypotheses into provisional clusters. For our client, we identified six distinct intent-based clusters. Let me illustrate with a fictional example of how this might work for a project management SaaS tool:

Sprint Executors: Users focused on rapid task completion and daily standup workflows. They need speed, simple task updates, and quick team coordination. Think startup teams moving fast with lightweight processes.

Client Project Coordinators: Users managing multiple client engagements simultaneously with strict deliverable timelines. They need client visibility controls, progress tracking, and professional reporting. Think agencies and consultancies.

Cross-Functional Orchestrators: Users coordinating complex projects across departments with dependencies and approval workflows. They need Gantt views, resource allocation, and stakeholder communication tools. Think enterprise program managers.

Personal Productivity Optimizers: Users treating the tool as their second brain for personal task management and goal tracking. They need customization, recurring tasks, and minimal collaboration features. Think solopreneurs and executives.

Seasonal Campaign Managers: Users with predictable high-intensity periods followed by dormancy. They need templates, bulk operations, and the ability to archive/reactivate projects easily. Think retail operations teams or event planners.

Mobile-First Coordinators: Users who primarily access the tool from mobile devices for field work or on-the-go updates. They need streamlined mobile experiences and offline sync. Think field service teams or traveling consultants.

Each cluster gets a descriptive name that captures the user’s primary intent, not just their behavior. “Sprint Executor” tells you more about what someone is trying to do than “high-frequency user.”

2. Conduct targeted user research

With provisional clusters defined, recruit users who fit each profile and conduct interviews to understand:

- Their primary use cases and goals when they first adopted the tool

- How they discovered and currently use the product

- Their typical workflows from start to finish

- What defines success in their role

- Pain points and unmet needs

- How they decide which features to explore

- What would make them cancel vs. what keeps them subscribed

For our client, we conducted three to four interviews per cluster, totaling around 24 user conversations. This gave us enough coverage to validate patterns without drowning in data.

The insights were eye-opening. We discovered that one cluster had the fastest time-to-value but the lowest feature adoption. They found what they needed immediately and never explored further. Another cluster showed the highest retention but needed the longest onboarding. They invested time up front because the tool was critical to their workflow.

3. Analyze behavioral data to confirm patterns

User interviews reveal what people say they do. Behavioral data shows what they actually do. Cross-reference your clusters against:

- Feature usage sequences (which tools appear together in sessions)

- Time-to-value metrics by cluster (how quickly do they get their first win)

- Retention and churn patterns (which clusters stick around)

- Upgrade and expansion behavior (which clusters grow their usage)

- Support ticket themes (which clusters need help with what)

- Feature adoption curves (how exploration patterns differ)

For our client, the data revealed critical differences. The “Sprint Executor” equivalent had fast initial adoption but plateaued quickly. They found their core workflow and stopped exploring.

The “Cross-Functional Orchestrator” cluster showed slow initial adoption but deep engagement over time. They needed to learn the tool thoroughly to unlock value.

These patterns weren’t visible in aggregate data. Only by segmenting users by intent could we see that different clusters had fundamentally different paths to retention.
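As a rough illustration of the first item on that list (which tools appear together in sessions), here is a minimal Python sketch that counts feature pairs per session. The session logs and feature names are hypothetical, not the client's data, and a real analysis would pull sessions from your analytics store and run the counts separately for each provisional cluster.

```python
from collections import Counter
from itertools import combinations


def feature_pair_counts(sessions):
    """Count how often each pair of features appears in the same session."""
    counts = Counter()
    for features in sessions:
        # Deduplicate and sort so each pair is stored under a single key
        for pair in combinations(sorted(set(features)), 2):
            counts[pair] += 1
    return counts


# Hypothetical session logs: each inner list is the features touched in one session
sessions = [
    ["quick_add", "board_view", "activity_feed"],
    ["quick_add", "board_view"],
    ["gantt", "dependencies", "resource_allocation"],
    ["gantt", "dependencies"],
    ["quick_add", "board_view", "mobile_update"],
]

pair_counts = feature_pair_counts(sessions)
for pair, n in pair_counts.most_common(3):
    print(pair, n)
```

Pairs that show up together far more often in one group of users than in another are a hint that those users share a workflow, which is what the interviews are then meant to confirm or refute.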

4. Build detailed cluster profiles

For each validated cluster, create a comprehensive profile that becomes the foundation for personalization. For example:

Cluster name: Sprint Executors

Primary intent: Complete daily tasks quickly with minimal friction and maximum team visibility

Most-used features:

- Quick-add task creation

- Board view for visual sprint planning

- Mobile app for on-the-go updates

- Real-time team activity feed

Typical workflow patterns:

- Morning standup with task assignments

- Throughout the day: quick updates and status changes

- End of day: marking tasks complete and planning tomorrow

Behavioral flags that identify this cluster:

- Creates 5+ tasks in first week

- Returns daily within first 14 days

- Uses mobile app within first 7 days

- Rarely uses advanced features like Gantt charts or dependencies

Retention drivers:

- Speed of task completion

- Team visibility and accountability

- Mobile accessibility

Churn risks:

- Tool feels too complex for simple needs

- Feature bloat making core actions harder to find

- Forced upgrades to access speed-focused features

Personalization opportunities:

- Streamlined onboarding focused on quick task creation

- Mobile-first feature discovery

- Templates for common sprint workflows

- Integrations with communication tools

These profiles become the single source of truth that product, marketing, and customer success can all reference.

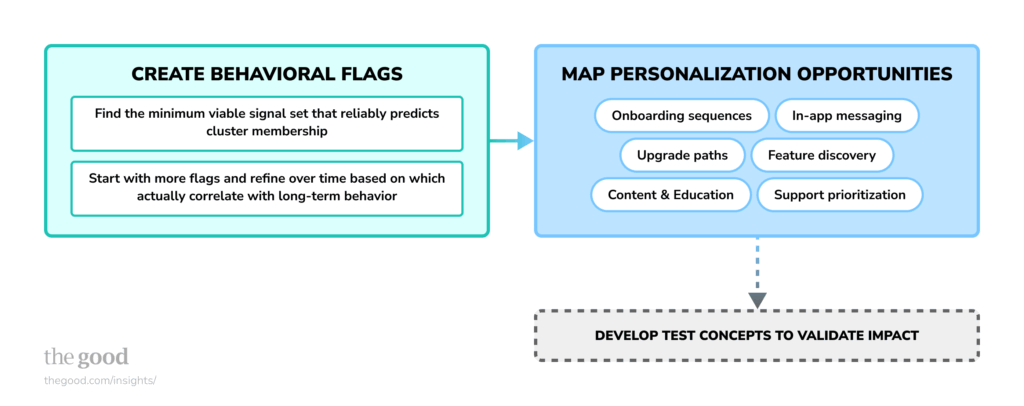

Phase 3: Develop indicators and personalization strategies

The third phase connects clusters to action. This is where the framework moves from insight to implementation.

1. Create behavioral flags for cluster identification

Most users won’t self-identify their intent at signup. You need to infer cluster membership from behavioral signals early in their journey. The key is identifying flags that appear within the first 7-14 days, early enough to personalize the experience before users decide whether the tool is right for them.

For reference, the “Sprint Executor” cluster in our fictional example:

- Created 5+ tasks in first week

- Logged in on 4+ separate days in first 14 days

- Used mobile app within first 7 days

- Board or list view used more than timeline/Gantt view (80%+ of sessions)

- Invited at least one team member within first 10 days

- Never explored advanced dependency features

- Average session length under 10 minutes

Versus the “Client Project Coordinator” cluster:

- Created 3+ separate projects within first week (indicating multiple clients)

- Used folder or workspace organization features within first 5 days

- Set up client-specific permissions or external sharing settings

- Created custom views or reports within first 14 days

- Longer average session times (20+ minutes per session)

- Uses professional or client-specific terminology in project names

- High usage of export or presentation features

The goal is to find the minimum viable signal set that reliably predicts cluster membership. Start with more flags and refine over time based on which actually correlate with long-term behavior.
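One simple way to turn flag lists like these into something a system can act on is a rule-based scorer. The Python sketch below is an assumption-laden illustration, not the client's implementation: the `EarlyActivity` fields, the subset of flags chosen, and the 0.75 matching threshold are all hypothetical and would need tuning against your own retention data.

```python
from dataclasses import dataclass


@dataclass
class EarlyActivity:
    """Hypothetical summary of a user's first 14 days."""
    tasks_created_week1: int
    active_days_first14: int
    used_mobile_first7: bool
    projects_created_week1: int
    set_up_external_sharing: bool
    avg_session_minutes: float


# Each cluster maps to (flag description, predicate) pairs taken from the lists above
CLUSTER_FLAGS = {
    "Sprint Executor": [
        ("5+ tasks in first week", lambda a: a.tasks_created_week1 >= 5),
        ("4+ active days in first 14", lambda a: a.active_days_first14 >= 4),
        ("mobile app within first 7 days", lambda a: a.used_mobile_first7),
        ("average session under 10 minutes", lambda a: a.avg_session_minutes < 10),
    ],
    "Client Project Coordinator": [
        ("3+ projects in first week", lambda a: a.projects_created_week1 >= 3),
        ("external sharing configured", lambda a: a.set_up_external_sharing),
        ("sessions of 20+ minutes", lambda a: a.avg_session_minutes >= 20),
    ],
}


def score_clusters(activity):
    """Return the fraction of each cluster's flags that the user matches."""
    return {
        cluster: sum(1 for _, check in flags if check(activity)) / len(flags)
        for cluster, flags in CLUSTER_FLAGS.items()
    }


def assign_cluster(activity, min_score=0.75):
    """Pick the best-scoring cluster, or None if nothing clears the threshold."""
    scores = score_clusters(activity)
    best = max(scores, key=scores.get)
    return best if scores[best] >= min_score else None


user = EarlyActivity(
    tasks_created_week1=8, active_days_first14=6, used_mobile_first7=True,
    projects_created_week1=1, set_up_external_sharing=False, avg_session_minutes=6.5,
)
print(score_clusters(user))
print(assign_cluster(user))
```

Returning `None` when no cluster clears the threshold is deliberate: users who span clusters or have not shown enough signal yet stay on the default experience rather than being forced into a category.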

One critical finding from our client work: early behavioral flags predicted retention better than demographic data.

A user who exhibited “Client Project Coordinator” behaviors in week one showed 40% higher 90-day retention than the average user, regardless of their company size or job title.

2. Map personalization opportunities to each cluster

With clusters and flags defined, identify specific ways to personalize the experience across the user journey:

Onboarding sequences: Tailor the first-run experience to highlight features that match user intent. Show Sprint Executors how to set up their first sprint board, not the full feature catalog with Gantt charts and resource allocation tools they don’t need.

In-app messaging: Trigger contextual tips based on usage patterns. When a Client Project Coordinator creates their third project with similar structure, suggest project templates to save time.

Feature discovery: Recommend next-step features that align with cluster workflows. For Sprint Executors who’ve mastered basic task management, introduce the mobile app and integrations with their communication tools—not complex dependency mapping.

Content and education: Send targeted educational content that addresses cluster-specific goals. Client Project Coordinators get tips on professional reporting and client permissions. Sprint Executors get productivity hacks and team coordination strategies.

Upgrade paths: Present pricing tiers and feature upgrades that match cluster needs. Don’t push team collaboration features to Personal Productivity Optimizers who work solo and won’t use them.

Support prioritization: Route support tickets differently based on cluster. Client Project Coordinators might get priority support since they’re often managing paying clients. Seasonal Campaign Managers might get proactive check-ins before predicted busy periods.

For our client, this mapping revealed opportunities they’d completely missed. One cluster had been receiving generic “explore more features” emails when what they actually needed was advanced security capabilities for compliance requirements. Another cluster kept churning at the end of trial because onboarding emphasized features they’d never use instead of the speed-focused tools that matched their workflow.

Phase 4: Develop test concepts to validate impact

Turn personalization opportunities into testable hypotheses. Don’t roll everything out at once. Start with high-impact, low-effort tests that prove the value of intent-based segmentation.

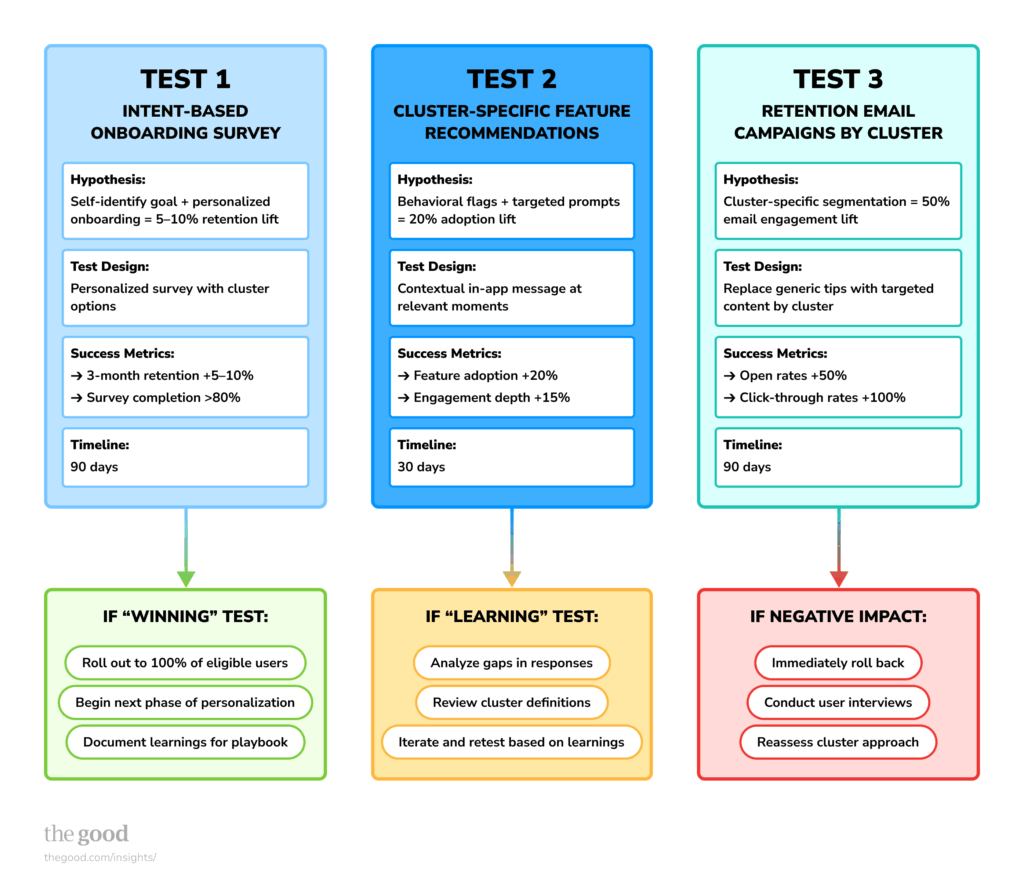

For our client, we proposed several test concepts structured to validate clusters quickly and build organizational confidence in the framework. Here are a few examples.

Example Test 1: Intent-Based Onboarding Survey

Background: The organization lacked a way to identify user intent at the critical moment when users were most open to guidance: right after signup, but before they’d formed opinions about product fit.

Hypothesis: By asking users to self-identify their primary goal during their first meaningful session, we can segment them into actionable clusters that enable personalized feature discovery and messaging, improving 3-month retention rates by 5-10%.

Test design: During the first session (after initial signup but before deep engagement), present a brief survey asking: “What brings you here today?” with options that map directly to identified clusters:

☐ Coordinate my team’s daily work (Sprint Executors)

☐ Manage multiple client projects (Client Project Coordinators)

☐ Organize complex cross-functional initiatives (Cross-Functional Orchestrators)

☐ Track my personal tasks and goals (Personal Productivity Optimizers)

☐ Plan seasonal campaigns or events (Seasonal Campaign Managers)

☐ Update projects while on the go (Mobile-First Coordinators)

☐ Something else (with optional text field)

Then immediately personalize their first experience based on their response: Sprint Executors see a streamlined task creation tutorial, Client Project Coordinators get guidance on setting up client workspaces, etc.

Success metrics:

- Primary: 3-month retention rate by selected cluster (looking for 5-10% lift)

- Secondary: Survey completion rate (target: >80%), feature adoption aligned with cluster (target: 20% lift), time to first value-generating action

- Guardrails: No negative impact on day 2 or day 7 retention

Acceptance criteria for “winning test”:

- Survey completion rate >80%

- At least 60% of users select a pre-set option (vs. “something else”)

- Statistically significant retention lift in at least one cluster

- No degradation in key engagement metrics

Acceptance criteria for “learning test”:

- More than 40% of users select “something else” (suggests clusters don’t match user mental models)

- No statistically significant difference in retention (suggests clusters exist, but personalization approach needs refinement)

Audience: New paid subscribers on first day, trial users converting to paid, reactivated users returning after 30+ days dormant. Start with 25% of eligible users to minimize risk.

Timeline: 90 days to measure primary retention metric, but early signals (completion rate, feature adoption) available within 14 days.
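For the "start with 25% of eligible users" part of the audience definition, one common approach is deterministic bucketing: hash the user ID so the same user always lands in the same group. The Python sketch below assumes string user IDs and a made-up experiment name (`intent_survey_v1`); it is one possible approach, not a description of the client's experimentation platform.

```python
import hashlib


def in_test_group(user_id, experiment="intent_survey_v1", rollout=0.25):
    """Deterministically place roughly `rollout` of users in the test group."""
    # Salting with the experiment name keeps buckets independent across tests
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 32 bits of the hash to a number in [0, 1)
    bucket = int(digest[:8], 16) / 0x100000000
    return bucket < rollout


# Sanity check on hypothetical user IDs: the share should land near 25%
share = sum(in_test_group(f"user-{i}") for i in range(10_000)) / 10_000
print(round(share, 3))
```

Because assignment depends only on the user ID and the experiment name, a user sees the same experience on every visit, and raising `rollout` later keeps everyone who was already in the test group in it.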

Example Test 2: Cluster-Specific Feature Recommendations

Background: Generic in-app messaging had low click-through rates (<5%) and wasn’t driving feature adoption. Users felt bombarded by irrelevant suggestions.

Hypothesis: For users who match behavioral flags within the first 14 days, triggering personalized feature recommendations will increase feature adoption by 20% and engagement depth by 15%.

Test design: Identify users by behavioral flags, then trigger targeted in-app messages at contextually relevant moments:

- Sprint Executors see mobile app download prompt after completing 5 tasks on desktop: “Update tasks on the go: get the mobile app”

- Client Project Coordinators see reporting features after creating third project: “Impress clients with professional progress reports”

- Cross-Functional Orchestrators see dependency mapping after creating complex project: “Map dependencies to keep cross-functional teams aligned”

Success metrics:

- Primary: Feature adoption rate for recommended features (target: 20% lift vs. control)

- Secondary: Overall engagement depth (features used per session), message click-through rate

- Guardrails: No increase in feature abandonment (starting but not completing flows)

Audience: Users who match cluster behavioral flags within first 14 days. Test one cluster at a time to isolate impact.

Timeline: 30 days to measure feature adoption impact.
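To check whether a result like "20% lift vs. control" is more than noise, a two-proportion z-test is one standard option. The Python sketch below uses only the standard library; the adoption counts are invented for illustration, and your analytics team may prefer a different test or a dedicated experimentation tool.

```python
import math


def two_proportion_z_test(conv_control, n_control, conv_variant, n_variant):
    """Return (relative lift, two-sided p-value) for variant vs. control."""
    p_c = conv_control / n_control
    p_v = conv_variant / n_variant
    # Pooled rate under the null hypothesis that both groups convert equally
    pooled = (conv_control + conv_variant) / (n_control + n_variant)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_control + 1 / n_variant))
    z = (p_v - p_c) / se
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    relative_lift = (p_v - p_c) / p_c
    return relative_lift, p_value


# Hypothetical counts: users who adopted the recommended feature in each group
lift, p = two_proportion_z_test(conv_control=300, n_control=2000,
                                conv_variant=372, n_variant=2000)
print(f"relative lift: {lift:.1%}, p-value: {p:.4f}")
```

Note that the lift here is relative (variant rate divided by control rate, minus one), which is how targets like "20% lift" are usually meant. Agree on relative versus absolute before the test starts so nobody reinterprets the target afterward.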

Example Test 3: Retention Email Campaigns by Cluster

Background: Generic “tips and tricks” email campaigns had 8% open rates and weren’t moving retention metrics. Content felt irrelevant to most recipients.

Hypothesis: Segmenting email campaigns by identified cluster will improve email engagement by 50% and show a measurable correlation with retention.

Test design: Replace generic weekly tips emails with cluster-specific content:

- Sprint Executors: “5 ways to speed up your daily standup” / “Mobile shortcuts that save 2 hours per week”

- Client Project Coordinators: “How to impress clients with professional project reports” / “3 ways to give clients visibility without overwhelming them”

- Personal Productivity Optimizers: “Build your second brain: advanced filtering techniques” / “Automate your recurring tasks in 5 minutes”

Send to users identified through either the onboarding survey or behavioral flags. Track engagement and retention by cluster.

Success metrics:

- Primary: Email open rates (target: 50% lift), click-through rates (target: 100% lift)

- Secondary: Correlation between email engagement and 90-day retention

- Guardrails: Unsubscribe rates remain stable or decrease

Audience: Users identified as belonging to specific clusters either through survey responses or behavioral flags, minimum 14 days after signup.

Timeline: 6 weeks for initial engagement metrics, 90 days for retention correlation.

Post-test analysis framework

For each test, we established a clear decision framework:

If “winning test”:

- Roll out to 100% of eligible users

- Begin development on next phase of personalization for that cluster

- Use learnings to inform tests for other clusters

- Document what worked to build organizational playbook

If “learning test”:

- Analyze all “something else” responses for missing clusters or unclear framing

- Review behavioral data to see whether the clusters exist but the personalization was wrong

- Iterate on messaging, timing, or format

- Decide whether to retest with refinements or try different approach

If negative impact:

- Immediately roll back to the control experience

- Conduct user interviews to understand what went wrong

- Reassess cluster definitions or personalization approach

- Consider whether the cluster exists but needs a different treatment
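Writing the decision rules down as code before the test launches makes it harder to move the goalposts afterward. The Python sketch below encodes the acceptance criteria from Example Test 1 under some assumptions: the function and parameter names are hypothetical, the 0.05 significance level is our choice (the criteria above only say "statistically significant"), and guardrail checks are reduced to a single boolean.

```python
def classify_survey_test(completion_rate, preset_share, cluster_results,
                         guardrails_ok, alpha=0.05):
    """Classify an onboarding-survey test as winning, learning, or negative.

    cluster_results maps cluster name -> (retention lift, p-value).
    """
    if not guardrails_ok:
        # Guardrail metrics degraded: roll back regardless of other results
        return "negative impact"
    significant_lift = any(
        lift > 0 and p_value < alpha
        for lift, p_value in cluster_results.values()
    )
    if completion_rate > 0.80 and preset_share >= 0.60 and significant_lift:
        return "winning test"
    return "learning test"


# Hypothetical results for two clusters
results = {
    "Sprint Executors": (0.06, 0.03),
    "Client Project Coordinators": (0.01, 0.40),
}
print(classify_survey_test(0.84, 0.71, results, guardrails_ok=True))
print(classify_survey_test(0.84, 0.55, results, guardrails_ok=True))
print(classify_survey_test(0.84, 0.71, results, guardrails_ok=False))
```

Each return value maps to one of the three branches above, so the team knows in advance which playbook applies once the numbers are in.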

The key to successful testing is starting small, measuring rigorously, and being willing to learn from failures. Not every cluster will respond to every type of personalization, and that’s valuable information. The goal isn’t perfect personalization immediately; it’s continuous improvement based on what actually moves metrics.

Intent-based segmentation mistakes and how to avoid them

Based on our experience implementing this framework, here are the mistakes that will derail your efforts:

1. Starting with too many clusters

More isn’t better. Six well-defined clusters are more useful than fifteen overlapping ones. You need enough clusters to capture meaningfully different intents, but few enough that teams can actually remember and act on them. Start with 4-6 clusters and refine over time. If you find yourself creating clusters that differ only slightly, you’ve gone too granular.

2. Confusing demographics with intent

Job title, company size, or industry might correlate with intent, but they don’t define it. We’ve seen solo consultants behave like “Cross-Functional Orchestrators” and enterprise teams behave like “Sprint Executors.” Focus on what users are trying to accomplish, not who they are on paper.

3. Creating overlapping clusters

Each cluster should be distinct in its primary intent and workflow patterns. If you’re struggling to articulate how two clusters differ behaviorally, they’re probably the same cluster with different labels. Test this by asking: “If I saw someone’s usage data, could I confidently assign them to one cluster?”

4. Ignoring edge cases entirely

Some users will span multiple clusters or switch between them based on context. That’s fine. The framework should accommodate primary intent while recognizing that users are complex. A user might primarily be a “Client Project Coordinator” but occasionally use “Personal Productivity Optimizer” features for their own task management. Don’t force rigid categorization.

5. Skipping the validation step

Your initial hypotheses will be wrong in places. User research and behavioral data keep you honest and prevent confirmation bias. We’ve seen teams fall in love with theoretically elegant clusters that don’t actually exist in their user base, or miss entire segments because they didn’t fit the initial hypothesis.

6. Treating clusters as static

User intent evolves. Someone might start as a “Personal Productivity Optimizer” and grow into a “Client Project Coordinator” as their business scales. Review and refine your clusters quarterly based on new data, product changes, and market shifts.

7. Personalizing too aggressively too soon

Start with high-confidence, low-risk personalization (like targeted email content) before you completely diverge user experiences. You want to validate that clusters behave differently before you build entirely separate onboarding flows.

8. Forgetting to measure impact

Intent-based segmentation is valuable only if it improves outcomes. Define success metrics upfront (e.g., retention lifts, engagement depth, upgrade rates, support ticket reduction) and track them by cluster. If personalization isn’t moving these metrics, refine your approach.

Making intent-based segmentation work for your organization

The framework we’ve outlined works across product categories and company sizes, but implementation varies based on your resources and organizational maturity.

If you have limited data: Start with Phase 1 and Phase 2, using qualitative research to define clusters before investing in behavioral infrastructure. You can manually tag users based on interview responses and onboarding surveys, then personalize through targeted emails and customer success outreach. As you grow, build the data systems to automate cluster identification.

If you have rich behavioral data but limited research capabilities: Reverse the order. Start with data patterns and validate through targeted interviews. Look for natural groupings in your analytics that suggest different workflow types, then talk to representative users from each group to understand their intent.

If you’re a small team: Don’t let perfect be the enemy of good. Start with 3-4 obvious clusters based on your highest-level workflow differences. The founder of a 10-person startup probably has a better intuitive understanding of user intent than a 500-person company with siloed data. Write down what you know, test it with a few users, and start personalizing.

If you’re a large enterprise: The challenge is getting organizational alignment, not defining clusters. Use Phase 1 to surface where teams already operate with different mental models, then use data to arbitrate. Create executive sponsorship for the new framework so it becomes the shared language across product, marketing, and CS.

The key is starting somewhere. Most companies know their one-size-fits-all approach isn’t working, but they keep personalizing around the wrong variables that don’t actually predict what users are trying to accomplish.

Intent-based segmentation reorients everything around the question that actually matters: What is this user trying to accomplish, and how can we help them succeed at that specific goal?

Turn insights into retention that drives revenue

Understanding user intent is just the first step. The real value comes from translating those insights into personalized experiences that keep users engaged and drive measurable revenue growth.

At The Good, we’ve spent 16 years helping SaaS companies identify their most valuable user segments and optimize experiences around what actually drives retention. Our systematic approach to user segmentation goes beyond frameworks. We help you implement experimentation strategies that prove which personalization efforts move the needle on the metrics your board cares about.

Plenty of companies struggle to implement segmentation that’s actually actionable. They end up with beautiful personas gathering dust or broad categories that don’t inform product decisions.

Intent-based segmentation is different because it connects directly to behavior you can observe and experiences you can personalize.

If you’re struggling with generic experiences that fail to resonate with different user types, or if you know your segmentation could be better but aren’t sure where to start, let’s talk about how intent-based segmentation could transform your retention strategy and drive revenue growth.

About the Author

Maggie Paveza

Maggie Paveza is a Strategist at The Good. She has years of experience in UX research and Human-Computer Interaction, and acts as an expert on the team in the area of user research.