How To Make User-Centered Decisions When A/B Testing Doesn’t Make Sense

Learn how adding rapid testing to your toolkit helps to accelerate decision-making, build confidence, and drive value.

The right tool for the right job. It’s a principle that applies everywhere, from construction sites to surgical suites, yet in digital product development, many teams focus almost exclusively on A/B testing.

Don’t get me wrong, A/B testing is incredibly powerful. It’s the gold standard for high-stakes, high-traffic decisions where statistical significance matters most. But when it becomes your only tool, you create unnecessary constraints that can paralyze decision-making and slow innovation.

The reality is that different decisions require different levels of rigor, confidence, and investment. Luckily, there is a complementary approach that fills critical gaps in your experimentation toolkit. By understanding when each method is most appropriate, teams can make faster, better-informed optimizations while maintaining the rigor needed for their highest-stakes decisions.

Creating “experience rot”

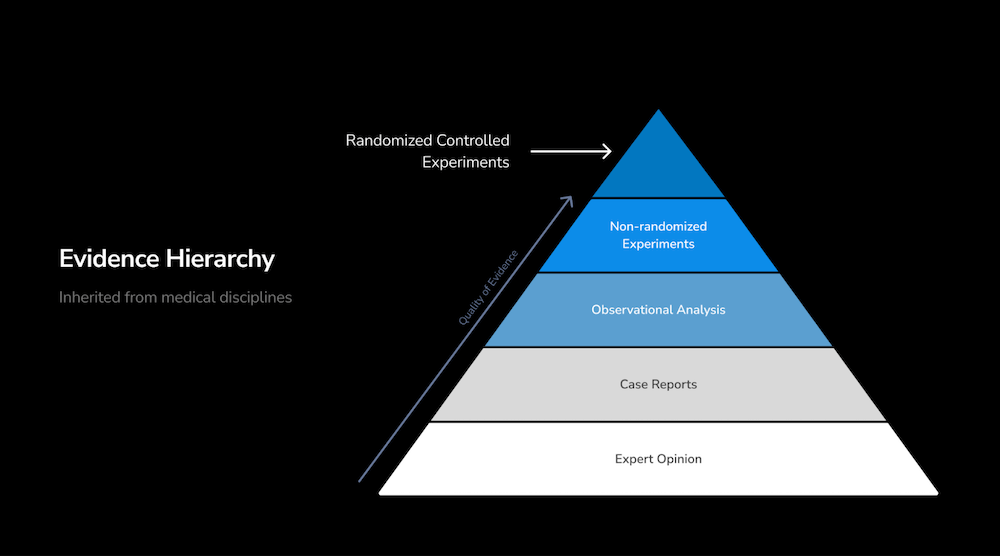

A/B testing borrowed its methodology from medical intervention studies, where 95% confidence intervals and statistical significance aren’t just nice-to-haves; they’re life-or-death requirements.

But most product teams aren’t running clinical trials, and product decisions don’t always need the same level of assurance to move toward the right outcome.

A/B testing can be overkill for the decisions product teams need to make daily. Yet teams have become so committed to this single methodology that they’ve created what researcher Jared Spool calls “experience rot”: the gradual deterioration of user experience quality caused by teams moving too slowly or focusing solely on economic outcomes.

The costs of slow testing cycles are tangible and measurable:

- Market opportunities disappear while waiting for test results

- Competitors gain ground during lengthy testing phases

- Development resources get tied up in prolonged testing initiatives

- Customer frustration builds as issues remain unfixed

- Decision fatigue sets in as teams debate what to test next

But the problem runs deeper than just speed. Many teams face contexts where A/B testing simply isn’t feasible. Regulatory challenges in healthcare and finance, low-traffic scenarios for B2B products, technical constraints, and organizational politics all create barriers to traditional experimentation.

By the time a test idea has jumped through all the bureaucratic hoops and oversight at an organization, it’s often no longer lean enough to justify testing. Without an alternative testing method, teams are left making decisions with no data at all.

So, how do we:

- Circumvent the challenges of A/B testing, and

- Prevent experience rot?

Enter rapid testing

Rapid testing isn’t about cutting corners or accepting lower-quality insights. It’s about matching your research method to the decision you’re trying to make, rather than forcing every question through the same rigorous, but often slow, process.

Like A/B testing, rapid testing helps you understand if your solutions are working. Unlike A/B testing, rapid tests are conducted with smaller sample sizes, completed in days rather than weeks or months, and often provide qualitative insights that A/B tests can miss.

“The speed at which we obtain actionable findings has been impressive,” says Gabrielle Nouhra, Software Director of Product Marketing, who leverages rapid testing with The Good for research and experimentation. “We are receiving rapid results within weeks and taking immediate action based on the findings.”

The key is understanding when each approach makes sense. Not every decision requires the same level of rigor, and smart product teams create systems that allow critical insights to move faster.

A framework for decision making

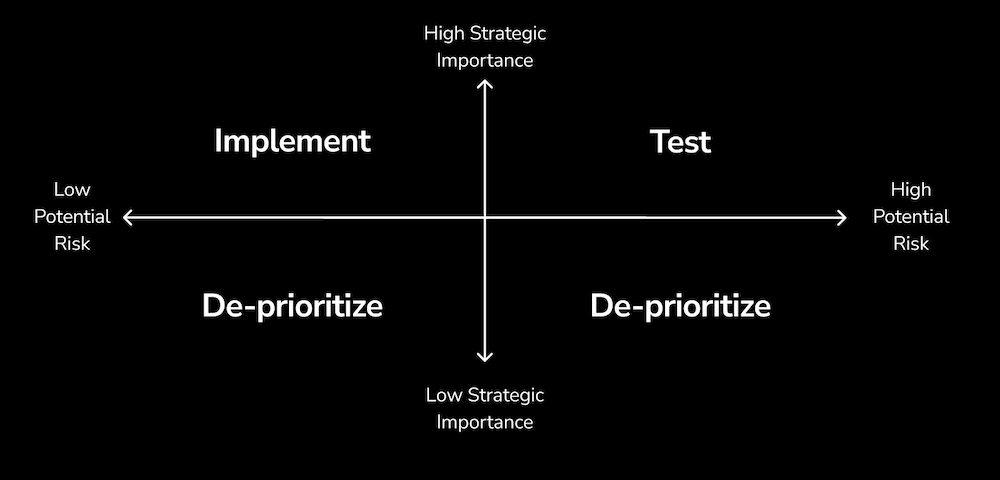

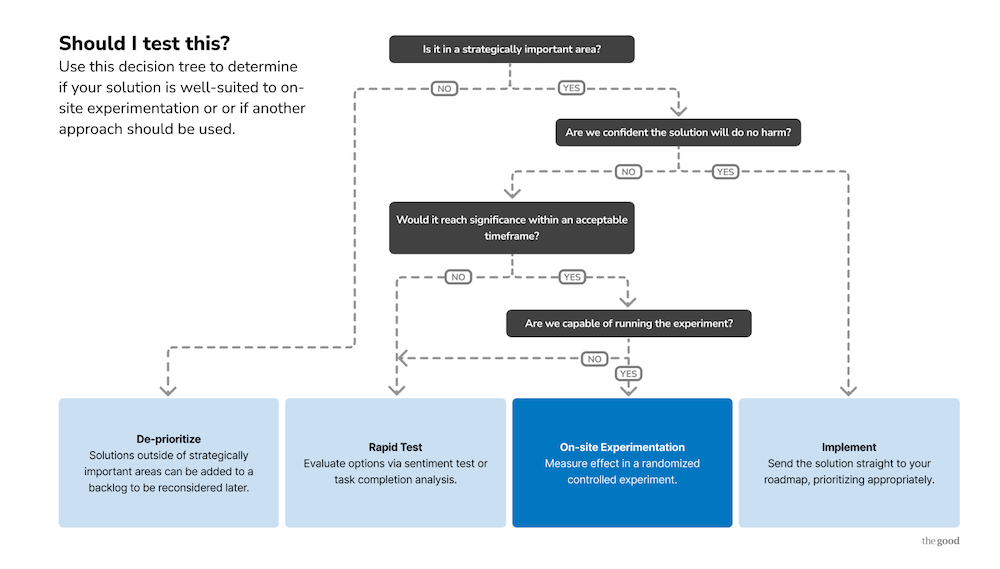

So, how do you decide when to use rapid testing versus A/B testing? The decision starts with two critical questions: Is this strategically important? And what’s the potential risk? With those two questions in mind, you can map your ideas on a simple 2×2.

High Strategic Importance + Low Risk = Just Ship It. If you can’t identify meaningful downsides to a change but know it’s strategically important, you probably don’t need to test it at all. These are your quick wins.

Low Strategic Importance = Deprioritize. Not everything needs to be tested. Some changes simply aren’t worth the time and resources, regardless of the method you use.

High Strategic Importance + High Risk = Test Territory. This is where both A/B testing and rapid testing live. The next decision point becomes: Can you reach statistical significance within an acceptable timeframe? Are you technically capable of running the experiment?

If the test isn’t technically feasible or traffic constraints make the time-to-significance longer than is acceptable, rapid testing becomes your best option for de-risking the decision.
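To make the “time-to-significance” question concrete, here is a minimal Python sketch of the 2×2 above combined with a rough traffic check. It assumes a conversion-rate metric, 95% confidence, 80% power, and traffic split evenly between variants, and it uses the standard normal-approximation sample-size formula for comparing two proportions. The function names and the `max_acceptable_days` cutoff are hypothetical illustrations, not part of any specific tool.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(baseline_rate: float, relative_lift: float,
                        alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed per variant for a two-sided
    two-proportion z-test (normal-approximation formula)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # 1.96 for 95% confidence
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_significance(daily_visitors: int, baseline_rate: float,
                         relative_lift: float, arms: int = 2) -> float:
    """Days of traffic needed if visitors are split evenly across arms."""
    return arms * sample_size_per_arm(baseline_rate, relative_lift) / daily_visitors


def choose_method(strategically_important: bool, high_risk: bool,
                  ab_test_feasible: bool, days_needed: float,
                  max_acceptable_days: float) -> str:
    """Map an idea onto the strategic-importance / risk 2x2."""
    if not strategically_important:
        return "deprioritize"
    if not high_risk:
        return "just ship it"
    # Test territory: A/B test only if it is feasible and fast enough.
    if ab_test_feasible and days_needed <= max_acceptable_days:
        return "A/B test"
    return "rapid test"


days = days_to_significance(daily_visitors=500, baseline_rate=0.03, relative_lift=0.10)
print(choose_method(True, True, ab_test_feasible=True,
                    days_needed=days, max_acceptable_days=28))
```

Under these assumptions, a B2B page with 500 visitors a day needs on the order of 200 days to detect a 10% relative lift on a 3% conversion rate, so even a feasible A/B test falls outside a four-week window and the sketch routes the idea to rapid testing.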

Rapid testing in practice

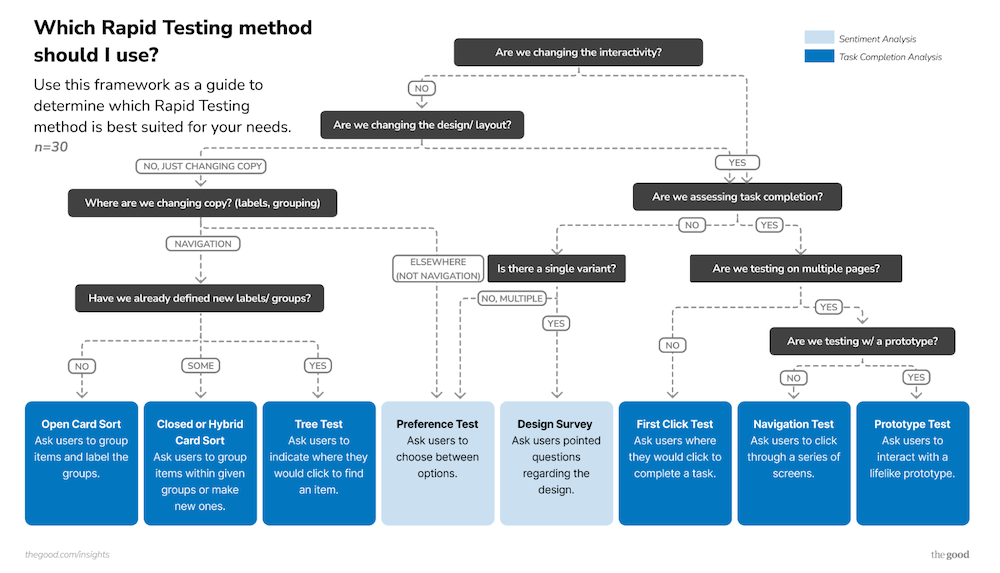

Rapid testing encompasses various methodologies, each suited to different types of questions. Here are just a few examples:

First-Click Testing helps confirm where users would naturally click to complete a task. Perfect for interface design decisions and navigation optimization.

Preference Testing goes beyond simple A/B comparisons to evaluate multiple options, often six to eight variations, helping teams understand which labels, designs, or approaches resonate most with their target audience.

Tree Testing reveals where users might stray from their intended path, using a text-only version of your navigation hierarchy to understand navigation behavior without the distraction of full visual design.

The beauty of these methods lies in their speed and specificity. Rather than testing entire page redesigns, rapid testing allows you to validate specific hypotheses quickly. Which onboarding segments will users self-identify with? Where should we place a new feature to maximize engagement? Which design elements increase trust among new visitors?

Rapid tests can also guide our A/B testing strategy. Suppose we’re weighing several candidate labels for new nomenclature within an app and simply want to know which one users find most accurate or most representative of the outcome. A rapid test can narrow down those options and help us decide which ones are worth A/B testing.

Building a rapid testing practice

Implementing rapid testing effectively requires more than just choosing the right method. Teams that see the best results follow several key principles:

- Impact pre-mortems: Before testing, clearly define what success looks like and what impact you expect if the change is implemented. This helps connect testing activities to business outcomes and prevents post-hoc justification of results.

- Acuity of purpose: Keep tests focused on specific questions rather than trying to evaluate everything at once. A/B testing often encourages comprehensive evaluations, but rapid testing works best with precise hypotheses.

- Pre-defined success criteria: Establish clear benchmarks before you start testing. If 80% of users can complete a task, is that a win? What about 60%? Define these thresholds upfront to avoid moving goalposts when results come in.

- Mute context: When testing specific elements, remove unnecessary context that might distract from the core question. Full-page designs can overwhelm participants and dilute feedback on the element being tested.

- Sunlight: Even experienced researchers benefit from collaborative review of test plans. Transparency builds confidence in the process, and a peer review of test designs helps identify potential issues before execution.

- Share: Circulate the impact of your tests and what you’ve learned about your audience, and get people excited about the work. The goal is to build visibility, make the case for why this work is valuable, and encourage people to make decisions with data.
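The pre-defined success criteria principle can be written down as code before any results arrive, which makes moving the goalposts harder. Below is a minimal Python sketch, assuming a task-completion metric and the 80% / 60% thresholds from the example above; the class and function names are hypothetical. Because rapid tests use small samples, it also reports a 95% Wilson score interval so the uncertainty stays visible alongside the verdict.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriteria:
    """Thresholds agreed on before the test runs (hypothetical values)."""
    win_rate: float         # e.g. 0.80: a clear win
    acceptable_rate: float  # e.g. 0.60: usable, but worth iterating on


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """95% Wilson score interval for a proportion. It behaves better than the
    plain normal approximation at the small sample sizes of rapid tests."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half


def evaluate(successes: int, n: int, criteria: SuccessCriteria) -> str:
    """Compare the observed completion rate with the pre-set thresholds."""
    rate = successes / n
    low, high = wilson_interval(successes, n)
    if rate >= criteria.win_rate:
        verdict = "win"
    elif rate >= criteria.acceptable_rate:
        verdict = "acceptable"
    else:
        verdict = "miss"
    return f"{verdict}: {rate:.0%} completed (95% CI {low:.0%} to {high:.0%}, n={n})"


criteria = SuccessCriteria(win_rate=0.80, acceptable_rate=0.60)  # set upfront
print(evaluate(17, 20, criteria))
```

The verdict follows the point estimate, as the agreed thresholds intend, while the interval is a reminder that with 20 participants an 85% completion rate is consistent with a true rate well below 80%. Teams that want a stricter rule could compare the interval’s lower bound against the threshold instead.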

The compound effects of speed

Teams that successfully implement rapid testing alongside their existing A/B testing programs see remarkable results. Our clients report a 50% improvement in A/B test win rates, better customer satisfaction scores, and significantly faster time-to-insights.

But perhaps most importantly, they report better team morale. There’s something energizing about seeing results from your work quickly, about being able to iterate and improve based on real user feedback rather than lengthy committee discussions.

It’s never too late to pivot. The idea is to move away from long-cycle decision making, where we send something through the whole design and development cycle only to end up with a lackluster outcome, toward a process that gives us quick, early signals.

Making the transition

The goal isn’t to replace A/B testing. It remains the gold standard for high-stakes, high-traffic decisions. But by adding rapid testing to your toolkit, you can accelerate the decisions that don’t require months of statistical validation while still maintaining confidence in your choices.

As decision scientist Annie Duke writes in Thinking in Bets, “What makes a great decision is not that it has a great outcome. It’s the result of a good process.” Rapid testing gives teams a process for rational de-risking that emphasizes both speed and quality.

The question isn’t whether you should test your ideas; it’s whether you’re using the right testing method for each decision. In a world where speed increasingly determines competitive advantage, teams that master this balance will consistently outpace those stuck with only one tool in their kit.

Ready to accelerate your decision-making process? Our team specializes in helping product teams implement rapid testing alongside existing experimentation programs. Get in touch to learn how we can help you cut testing time without sacrificing insight quality.

About the Author

Natalie Thomas

Natalie Thomas is the Director of Digital Experience & UX Strategy at The Good. She works alongside ecommerce and product marketing leaders every day to produce sustainable, long-term growth strategies.